Some days ago, I made this bold claim that categories really just model theories of sets (with additional structure). You can read the detailed spiel here, but I’ll summarise the main points below:

- Given an object

, we have a set

of “

-shaped” elements of

, for any object

. Therefore,

is really a collection of “generalised elements”.

- By thinking of

in terms of its generalised elements, we can do set theory in any category! First example: a morphism

is monic if it is injective on generalised elements—hence, we have an analogue of injectivity of functions!

- Second example: the categorical product

is just the collection of pairs

where

is a generalised element of

, and

is a generalised element of

—hence, we have an analogue of the cartesian product of sets!

I justified all this by appealing to the Yoneda lemma. However, I have only told half of the story. What about the many other set-theoretic constructions? Sometimes, generalised elements just don’t cut it, and for good reason.

Let’s look first at trying to translate surjectivity of functions to category theory. A function of sets is surjective if we can find for any element

some element

such that

. So, why not define a morphism of a general category to be “surjective” if it is surjective on generalised elements? This turns out to be no issue in

, but this is for special reasons (as we will see in a bit). Let’s see some of several places where morphisms that should be surjective fail to be by this definition:

- In

: consider the canonical group homomorphism

from the integers to the group of integers modulo two, which sends an integer to its remainder modulo two. This is obviously surjective on genuine elements (and it’s a projection onto a quotient!), but it actually fails to be surjective on all generalised elements. For one example, we have two generalised elements of shape

in

—one is trivial, and the other sends an integer modulo four to its remainder modulo two—but we only have one generalised element of the same shape in

(the trivial one)..

- In

: you can use the same example, viewing the above groups as rings.

- In

: take any set

with at least two elements, then consider the identity function

, where we view

as having the discrete topology, and

is the same set, but with the codiscrete topology. This is even a bijective continuous function, but it’s still not surjective on generalised elements! (In fact, it’s only surjective on generalised elements of a discrete shape.) Consider the unit interval

: the

-shaped points of a space are the paths in the space. However, the only paths on the discrete space

are constant, whereas all maps

are paths on

.

Before we talk about what the correct notion of surjectivity should be in a general category, what’s actually going here? Well, the problem is that the axiom of choice fails for all of these categories:

Proposition 1: In any category, a morphism is surjective on generalised elements if and only if it has a section (a right inverse); that is, there exists a morphism

such that

. Such a morphism is called a split epimorphism.

Proof. It’s clear that if has a section

, then it is surjective on generalised elements: for any generalised element

of

, just take the generalised element

of

, then

. In the other direction: if

is surjective on generalised elements, then there must be a generalised element

of

that corresponds to the element

of

—this is precisely a section of

.

(This also exposes that for all of my examples, I picked needlessly complicated generalised elements as counterexamples to this strong notion of surjectivity.) In the category , this means that a function is surjective if and only if it is surjective on generalised elements. This is because the axiom of choice is the statement that every surjective function of sets admits a section.

Here’s another example where generalised elements fail to be helpful: the disjoint union of sets is defined to be the set

that consists of a distinct copy of every element of

and a distinct copy of each element of

. In a general category, then, why not define the “disjoint union” of objects

to be—when it exists—the object whose

-shaped elements are those of

along with those of

? In other words, a map

corresponds either to a unique map

or a unique map

. The issue here is even more severe—this doesn’t even work in

: this says that a function

has to either map entirely into

or otherwise entirely into

, but we can easily construct functions

that map partially into

and partially into

(for example, just take the identity map

).

So what do we do? Well, a very loose hint is that surjectivity and disjoint unions seem somehow associated with injectivity and cartesian products, respectively. You might even be so bold as to say they feel “dual” to each other. I wish I could remember if this “duality” felt like something that should be true to me when I was a naïve little first year who just learned these things (yeah, I didn’t learn formal maths until I entered university). Regardless, there is some evidence that could loosely suggest the existence of some elusive duality in each case:

- Given a function

, injectivity only concerns itself with the domain

, whereas surjectivity is about the codomain

. There’s also plenty of dual-looking facts about injectivity and surjectivity. For instance, a function with a left inverse is always injective, while a function with a right inverse is always surjective. Also, given functions

and

, we have that injectivity of

implies injectivity of

, whereas surjectivity of

implies surjectivity of

.

- The “duality” between the cartesian product and disjoint union might seem like more of a stretch than the above duality (unless, of course, you already know where to look). Here’s a convoluted way to do it. It doesn’t take many mental leaps to agree that the cartesian product is to multiplication of numbers as the disjoint union is to addition—I mean, if the sets are finite, then

and

. This might be enough if you already think addition and multiplication are “dual” to each other in some way. Otherwise, restrict multiplication and addition to

and identify 0 with “false” and 1 with “true” (this might be natural if you’re a computer scientist), then you’ll find that multiplication reduces to conjunction (“and”), and addition reduces to disjunction. Now we’re in business: these really are dual to each other, and not just in name. By De Morgan’s Laws, the negation of conjunction is disjunction, and the negation of disjunction is conjunction!

The above discussions should at least convince you that maybe—just maybe—there’s some sort of underlying duality at work here. To see what this duality really is, here’s another argument for the disjoint union being dual to the cartesian product (and this time, it’s a lot less “forced”). Think about how you would define a function , and compare it with how you would define a function

. For the cartesian product, it’s enough to define functions

and

, and then the function

would just send

. For the disjoint union, it’s similarly enough to define functions

and

, as the corresponding function

would be given by sending

to

and

to

.

In fact, this is the duality! The disjoint union is just like the categorical product, except the morphisms are all pointing backwards. In fact, the same can be said about surjections (when compared to injections): a function is surjective if and only if for every pair of functions

such that

, it must have already been the case that

. Indeed, the reason for this is because

being surjective allows us to “see all of

” through

, and so we would have been able to catch any

for which

. What’s great is that we don’t need to work that hard to make dual definitions (so for any definition in category theory, you get one free!) thanks to opposite categories:

Definition 2: Given a category , its opposite category is the category

whose objects are the same as those of

, and whose morphisms

are precisely the morphisms

in

. In particular, this means that composition is defined by

, and the identity morphisms are just

.

With access to opposite categories, we can define the appropriate analogues of surjections and disjoint unions in arbitrary categories. For the analogue of surjections: a morphism is called an epimorphism if

is a monomorphism in the opposite category. For the analogue of disjoint unions: the coproduct

is just the categorical product in the opposite category.

- The proof that surjective functions in

are epimorphisms translates easily to many categories of “set-like” objects (e.g.,

,

,

,

) to show that all surjective functions are epimorphisms.

- Be careful about the converse, as it is not always true: the ring homomorphism

is in fact an epimorphism. That being said, epimorphisms in

are surjective thanks to the existence of codiscrete spaces, epimorphisms in

are surjective by looking at its cokernel, and epimorphisms are also surjective for groups.

- Be careful about the converse, as it is not always true: the ring homomorphism

- If you think of a (partially) ordered set

as a category, then the coproduct of elements

—if it exists—is their join (or supremum)

. In particular, let

(where we think of 0 as false and 1 as true), then the categorical product in

is conjunction, and the categorical coproduct in

is disjunction!

- The coproduct of topological spaces is just given by their disjoint union.

- The coproduct of groups is given by the free product

. The relation to the disjoint union of sets is as follows. We can present any group

as a set

of generators subject to a set

of relations—written as

. Then, the free product of two groups

and

is just the group

.

- The coproduct of commutative rings is given by their tensor product (as

-algebras)

.

- The coproduct of vector spaces is given by the direct sum

. This is because all vector spaces are free, so by picking a basis

and

, we just see that

. You might notice that the product of vector spaces is also given by the direct sum. This phenomenon actually happens in any category where morphisms can be “added” together (but note that this only works for finite (co)products—in the infinite case, we find that the product becomes strictly bigger!)

We can also define a “generalised coelement” of an object to be a generalised element in the opposite category, and therefore

-shaped coelements of

are just morphisms

. We then can make the definitions of epimorphisms and coproducts (and more!) just as precise as their duals:

| Notion | Definition | Dual Definition | Dual Notion |

|---|---|---|---|

| monomorphism | injective on generalised elements | injective on generalised coelements | epimorphism |

| split epimorphism | surjective on generalised elements | surjective on generalised coelements | split monomorphism |

| product |

objects whose |

objects whose |

coproduct |

Moreover, you can make things precise just as in my post about elements and characterise objects completely (up to isomorphism) by their coelements, yielding the notion of (corepresentable) copresheaves so that the above definition of a coproduct makes sense as the corepresenting object for the copresheaf

. The Yoneda Lemma applies here as well to tell us that the elements (not coelements!) of

for any copresheaf

correspond precisely to the

-shaped coelements of

itself so that this all makes sense.

Side note: The above statement is not the co-Yoneda Lemma! The co-Yoneda Lemma tells us that any presheaf can be computed as the coend

—in particular, this tells us that every presheaf can be computed as a colimit of representable presheaves. The reason that this statement is called the “co-Yoneda Lemma” is because the Yoneda Lemma can be equivalently stated as an end formula that

(the right hand side is exactly the set of

-shaped elements of

).

So what are generalised coelements a generalisation of?

Recall that generalised elements generalise elements by viewing them as maps . Is there an actual “coelement” (of a set) that we could think of generalised coelements as a generalisation of? An answer to this question should have some similar properties to those of elements (of sets, spaces, groups, etc.). The key property, perhaps, is that elements allowed us to completely characterise the behaviour of morphisms in these concrete categories. Of course, there is a formal definition for this kind of property:

Definition 3: An object is called a separator if the corepresentable functor

is faithful. In other words, if

are morphisms such that

for every

, then in fact

; that is, a morphism is completely determined by its action on

-shaped elements.

This means our desired “coelement” should be a generalised coelement whose shape is given by a coseparator—that is, a separator in the opposite category. To figure this out, it might help to answer this other pressing question: what really is the category ?

Surprisingly, we can be more concrete than just saying “it’s with its arrows reversed.” A motivating question to help us out would be: given a function

(of sets), is there a canonical way to reverse it and think of it as a function

in the other direction? A knee-jerk instinct would be to say that

whenever

, but this clearly doesn’t define a function—it would only be a function if

were bijective, in which case we find that

. Nonetheless, this turns out to be on the right track. It’s just that we have to make a few adjustments so that it actually works.

may not be well defined for some

because there may be several (or no)

with

. We can fix this and make it a function by taking

a subset of

; that is, just say

. Now it’s a function

into the power set (set of subsets) of

. We’re not quite done, because this seems a bit asymmetric. Fortunately, we can extend

to the power set of

too: for

, just define

. This is a well-defined function, and is called the preimage function

.

You can check that and

, so this shows that taking power sets defines a functor

. It’s also not hard to see that this functor is faithful. Therefore,

is equivalent to some category whose objects are “sets that look like power sets” and whose “functions look like preimages”. Therefore, we just need to articulate what kind of structure power sets have (that typical sets don’t), and then we need to articulate how this structure is preserved by preimages:

- The elements of a power set are sets, so it’s reasonable to think about taking unions and intersection. In fact, we can take arbitrary unions and intersections: if

for every

, then

and

. Moreover, these operations distribute over each other: if

for

and

, then

.

- Regarding preimages, notice that if

, then

and

. In other words,

preserves all unions and intersections.

- Regarding preimages, notice that if

- Similarly, we can take complements of subsets: if

, then

. The key property here is that

and

for every

.

- For preimages, we have that

, but this actually follows formally from preservation of unions and intersections (

and likewise for unions), so we don’t really need to pay attention to this.

- For preimages, we have that

- We can partially order subsets with the

relation. Under this relation, the unions are the joins (i.e., coproducts), and the intersections are the meets (i.e., products). Note that preimages preserve this relation: if

, then

.

- Last thing: the subsets of

are built from the elements of

: that is, every

defines a unique subset

such that for any

we have

.

This is all the structure we need to describe power sets and preimages: these properties of power sets axiomatise those partially ordered sets that are complete atomic Boolean algebras. Accordingly, define the category to be the category whose objects are these complete atomic Boolean algebras, and whose morphisms are the monotone functions that preserve all joins and meets (called complete homomorphisms), then we find:

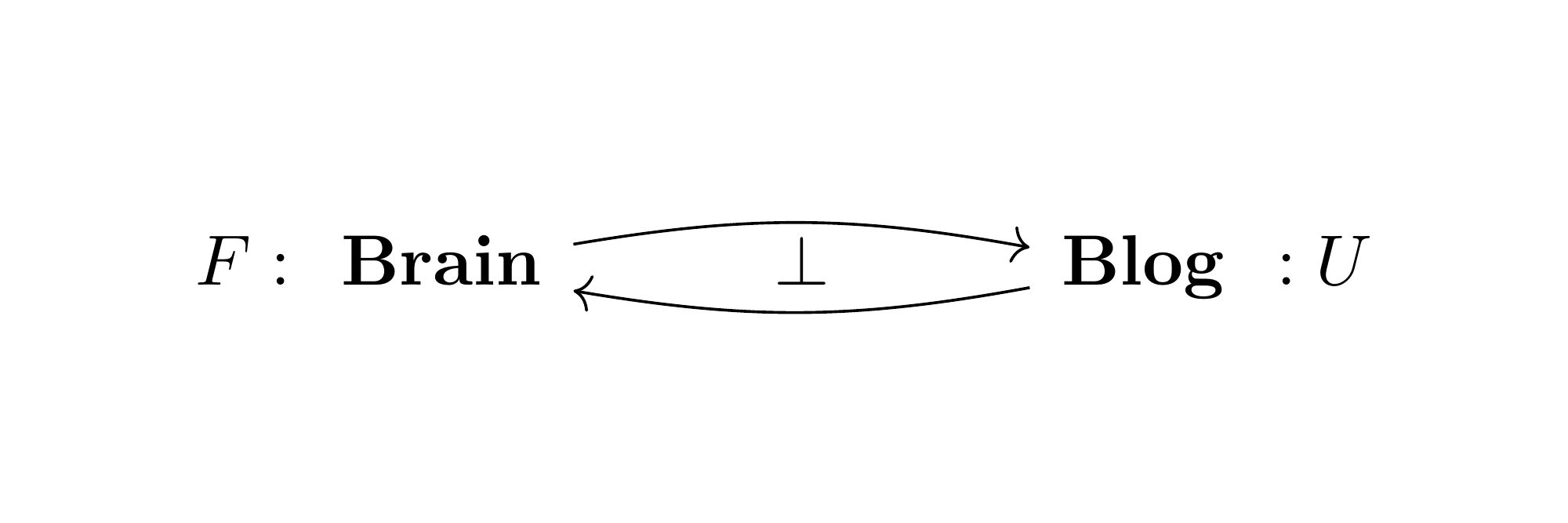

Proposition 4: The power set functor defines an equivalence of categories .

The inverse of is not too surprising: given a complete atomic Boolean algebra

, let

be its set of atoms. Given a complete homomorphism

, we get a function of atoms

(in the other direction!) which sends an atom

to the (necessarily unique) atom

with

. The reason

exists is because

has to be equal to

; such an

is unique because for

in

, we have

. Therefore, we get the (weak) inverse

.

Now, to seal the deal, we need to find a separator for . Well, we already have a faithful forgetful functor

that just remembers the elements of a complete atomic Boolean algebra (not just the atoms!), so it would be enough to find a corepresenting object for

. This might not seem like much help, but from Proposition 4, we can instead rephrase this as finding a representing object for the power set functor

—that is, a set

such that elements of

correspond to functions

.

Answer: take . Indeed, given a subset

, we have its indicator (or characteristic) function

where

when

and

otherwise. Conversely, given a function

, this defines the subset

. There we go—genuine coelements of

are the subsets!

Side note: forgetful functor actually has a left adjoint given by

(except that

in

is sent to the direct image

, where

is sent to

). Because of this adjunction, the corepresenting object for

is immediately determined to be

(see the side note in my post about adjunctions to see why). There are only two subsets of the singleton:

(corresponding to 0) and

(corresponding to 1).

With this newfound understanding of coelements as subsets, we can re-describe surjections and disjoint unions of sets. A function is surjective if and only if its preimage is injective on subsets. Likewise, the disjoint union of

and

is the set

whose subsets are given by pairs of subsets

and

(indeed,

comes from

and

).

2 thoughts on “(Co-)Elementary, my dear Watson”