- The integral

- Winding paths, up and down hills

- Going great lengths on Earth

- Measuring great lengths on Earth

Road trip!

Well, honestly, you’re not exactly the biggest fan of the road part, especially not as a passenger. Choosing not to partake in the off-tune, off-rhythm “car-aoke,” you tune in to your music player and try to find some way to entertain yourself. With all the visual stimuli at your disposal, you find your eyes focusing on the speedometer needle. You’re a long way from home, and—

—wait, how long from home, exactly?

You could probably just check your phone, but it’s not like your exact whereabouts are particularly important. There’s no rush, so why not try to kill some time by figuring this out some other way? You’ve been watching the speedometer for so long, surely that should give enough information to answer your question. So, how do you do it?

Well, suppose the car had been driving at a constant velocity, say, one hundred kilometres per hour due east. Then, as long as you know how long you have been on the road, you can figure out how much ground you have covered. For instance, if you were on the road for an hour at one hundred kilometres per hour due east, then you have travelled one hundred kilometres east. After two hours, you would have travelled two hundred kilometres east. After only half an hour, you would have only travelled fifty kilometres east. The “formula” you use to determine this is the definition of velocity rewritten:

$$\text{displacement} = \text{velocity} \times \text{time elapsed}.$$

In fancier (i.e., mathematical and physical) notation, this would be written as

$$\Delta x = v\,\Delta t.$$

Of course, the car was not driving at a constant velocity… in fact, the velocity seemed to always be changing. How do you account for a continuously changing velocity? Although the car’s velocity is never constant, it doesn’t seem to be changing too drastically. Therefore, you get the idea: sample the velocity every $10$ minutes, and then assume that the velocity remains “pretty much constant” in each interval.

More precisely, if you sample at time $t_i$ and find that the velocity is equal to $v(t_i)$ at that time, then you just pretend that the car remains at velocity $v(t_i)$ for the entire $10$-minute time interval. Under this assumption, the ground covered in these ten minutes is approximately

$$v(t_i)\,\Delta t, \qquad \text{where } \Delta t = 10\text{ minutes}.$$

Sampling at times $t_0, t_1, \dots, t_{n-1}$, all ten minutes apart, you use the above computation to determine the ground covered in each time interval. The total ground covered is then just the result of adding all of these together:

$$v(t_0)\,\Delta t + v(t_1)\,\Delta t + \dots + v(t_{n-1})\,\Delta t.$$

Adding up many terms that “look similar” happens a lot in mathematics, so we usually shorten the above sum using “Sigma notation”:

$$\sum_{i=0}^{n-1} v(t_i)\,\Delta t.$$

The notation just means to add the terms $v(t_i)\,\Delta t$ where $i = 0, 1, \dots, n-1$ (each of which is exactly the expression before). Now, you already know that $\Delta t = 10\text{ minutes} = \tfrac{1}{6}\text{ hour}$, so just plug this into the above formula:

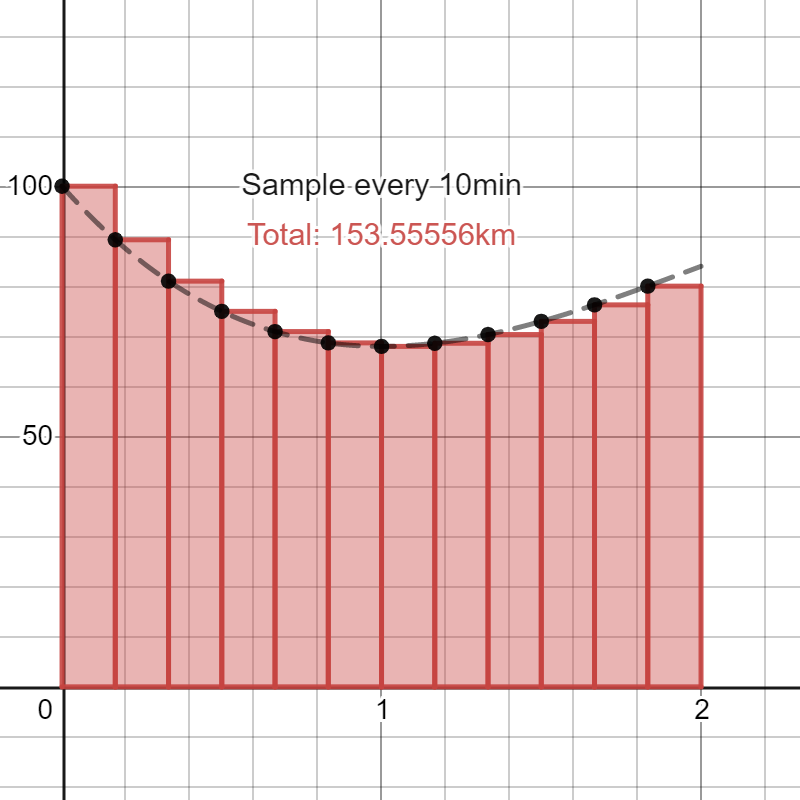

You can visualise this calculation: if you plot the velocity of the car over time, and then sample every $10$ minutes, the above calculation computes the area in red in the figure below.

As you can see, the red region is approximately the area under the curve. Thinking back to when you were computing the instantaneous velocity of the falling apple, you figure that your approximation of the total distance covered would get better if you sample more frequently (that is, if you take $\Delta t$ to be ever smaller), since the velocity will be closer to being constant in smaller time intervals:

(You can experiment with this yourself on Desmos.) Because the approximation keeps approaching the true total distance covered, you realise that the total distance covered is the limit of these approximations as you take $\Delta t \to 0$. To summarise, you find that

$$\text{total distance} = \lim_{\Delta t\to 0}\sum_{i=0}^{n-1} v(t_i)\,\Delta t.$$

(Working this out for the velocity function in the above picture, you get that the distance travelled is exactly 152 km.) Even though you’ve figured out how to solve the problem you gave yourself, there’s still plenty of road left, so you let your mind continue off this tangent.
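The sampling procedure can be sketched in a few lines of Python. This is only an illustration under an assumption: the velocity profile `velocity_kmh` below is hypothetical (the function behind the figure isn’t given), chosen so that the car starts from rest, peaks at 100 km/h after one hour, and stops again at the two-hour mark, for an exact total of 400/3 ≈ 133.3 km.

```python
def velocity_kmh(t_hours: float) -> float:
    """Hypothetical speedometer reading (km/h) at time t (hours), for 0 <= t <= 2."""
    return 100.0 * t_hours * (2.0 - t_hours)


def distance_estimate(v, t_end: float, dt: float) -> float:
    """Sample v every dt hours, treat it as constant on each interval, and add up v(t_i) * dt."""
    n = round(t_end / dt)  # number of sampling intervals
    return sum(v(i * dt) * dt for i in range(n))


trip_hours = 2.0
exact_km = 400.0 / 3.0  # integral of 100 t (2 - t) from 0 to 2
for minutes in (10, 1, 0.1):
    estimate = distance_estimate(velocity_kmh, trip_hours, minutes / 60.0)
    print(f"every {minutes} min: {estimate:.4f} km (error {exact_km - estimate:.4f} km)")
```

Shrinking the sampling interval from 10 minutes down to 6 seconds drives the error towards zero, which is the limit $\Delta t\to 0$ in action.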

The integral

If you replace $v$ with an arbitrary function $f$, it no longer makes as much sense to ask “how far have we travelled,” but the visualisation of the computation still makes sense: the above formula can be used to compute the area under the graph of a function! Suppose I wanted to measure the area under a function $f\colon [a,b]\to\mathbb{R}$, where $a < b$. Then, just as before, I would sample $f$ at various input values $x_0, x_1, \dots, x_{n-1}$ that are all some $\Delta x$ apart, compute the areas $f(x_i)\,\Delta x$ of the rectangles of width $\Delta x$ and height $f(x_i)$, and then sum them up. As long as I assume that my function is nice enough (meaning that my function “looks constant” on every interval of length $\Delta x$ if I take $\Delta x$ small enough; such a function is called uniformly continuous), then I should get a pretty good approximation for the area, and the approximation should get better and better as I take $\Delta x\to 0$.

To summarise: for a (uniformly continuous) function $f\colon[a,b]\to\mathbb{R}$, the area of the region under $f$ is given by

$$\lim_{\Delta x\to 0}\sum_{i=0}^{n-1} f(x_i)\,\Delta x.$$

This notation kind of hides $a$ and $b$ (implicitly, $x_0 = a$, and $x_n = x_0 + n\,\Delta x = b$), so to be more explicit, we could abuse notation a little bit and write the above expression instead as

$$\lim_{\Delta x\to 0}\sum_{x=a}^{b} f(x)\,\Delta x,$$

where the summation reads as “sum over values of $x$ that start at $a$, and go up by $\Delta x$ until you reach $b$.” The key, here, is that we are taking $\Delta x\to 0$, which is very similar to what you did to take derivatives of functions. Remember that $\Delta$ is the capital Greek letter “D” and represents “change,” so if you wanted to refer to a “really small change,” suggestive notation would be to use a little $d$. Therefore, if $\Delta x$ is a change in $x$, then $dx$ is a “small” change in $x$.

Likewise, when $\Delta x\to 0$, the above sum becomes increasingly fine (you sum over the function with increasing resolution), almost making it “smooth.” Since $\Sigma$ is the capital Greek letter “S” (for “sum”), a smooth sum should be written with a smoother “S” (such as an elongated version of our own English “S”). Adopting this suggestive notation, we get to the more modern notation for the above sum:

$$\int_a^b f(x)\,dx := \lim_{\Delta x\to 0}\sum_{x=a}^{b} f(x)\,\Delta x.$$

This is called the integral of $f$ on the interval $[a,b]$. As per the above discussion, this computes the area* under $f$ in this interval.

*Technical point. Whenever $f(x) < 0$, the “area under the curve” is negative, so this is more appropriately some kind of “signed” area.

While this does give us a precise way of computing the area under a curve, it is quite tedious to do directly… there’s no way you’d determine how far you travelled by integrating your velocity over the time travelled! If you wanted to determine how far you travelled from time $t = a$ to time $t = b$, it would be much simpler to take your final position $x(b)$ and take its difference from your initial position $x(a)$ (the mathematical equivalent of just checking your location on the phone).

… So would we be able to do a similar thing for other functions? We have two ways of computing how far we travelled: on one hand, we could take the velocity over time and integrate; on the other hand, we can just take the difference of positions (way easier). If they’re both done correctly, then they have to agree, so this gives us a formula

$$\int_a^b v(t)\,dt = x(b) - x(a).$$

To extend this formula to other functions, you need a way of connecting velocity and position. This is when you remember: velocity is the derivative of position with respect to time!

This means that if $f$ is the derivative of some other function $F$ (i.e., $F' = f$), then we can view $f$ as a “velocity function” and $F$ as the “position function” and proceed just as you did in the car, and we obtain:

Fundamental Theorem of Calculus. If $F' = f$ on $[a,b]$, then

$$\int_a^b f(x)\,dx = F(b) - F(a).$$

This dramatically simplifies integration from being near impossible to being near impossible only sometimes.
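As a sanity check of the theorem, the sketch below compares left-endpoint sums (the same rectangles as in the car computation) against $F(b) - F(a)$. The example $f = \cos$ with antiderivative $F = \sin$ on $[0, \pi/2]$ is an arbitrary choice; the last lines also show the “signed area” footnote in action, since the positive and negative lobes of $\sin$ over a full period cancel.

```python
import math


def left_riemann_sum(f, a: float, b: float, n: int) -> float:
    """Approximate the integral of f over [a, b] using n rectangles of width dx = (b - a) / n."""
    dx = (b - a) / n
    return sum(f(a + i * dx) for i in range(n)) * dx


a, b = 0.0, math.pi / 2
exact = math.sin(b) - math.sin(a)  # F(b) - F(a) with F = sin, F' = cos

for n in (10, 100, 1000, 10_000):
    approx = left_riemann_sum(math.cos, a, b, n)
    print(f"n = {n:>6}: Riemann sum = {approx:.6f}, F(b) - F(a) = {exact:.6f}")

# Signed area: the lobes of sin above and below the axis cancel over one full period.
signed_area = left_riemann_sum(math.sin, 0.0, 2 * math.pi, 1000)
print(f"signed area of sin over [0, 2*pi]: {signed_area:.2e}")
```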

This is all fine and dandy, but then you hear your old physics teacher in your ear: velocity is a vector. Looking out the window, you realise the driver has been taking the scenic route (in the middle of the night)… all of your calculations have been based on just the speed of the car, and so you’ve only figured out how to calculate the total mileage of the vehicle.

So, you redo the entire computation, but this time using a vector-valued function $\mathbf{v}(t) = (v_1(t), v_2(t))$, which at time $t$ gives you the horizontal and vertical components (i.e., along the two axes of the map) of the velocity of the vehicle. If you divvy up time into small intervals of length $\Delta t$ again (and treat the velocity as constant in each interval), you realise that you’re just doing exactly the same thing as before, but on each component: the total displacement per interval is just a vector whose first component is the horizontal displacement $v_1(t_i)\,\Delta t$, and whose second component is the vertical displacement $v_2(t_i)\,\Delta t$. Therefore, you realise that

$$\text{total displacement} = \left(\int_a^b v_1(t)\,dt,\ \int_a^b v_2(t)\,dt\right) =: \int_a^b \mathbf{v}(t)\,dt.$$

Let $\mathbf{r}(t) = (x(t), y(t))$ be the position (i.e., coordinates) of the vehicle at time $t$, where $a \le t \le b$. You don’t know what this function is (since you’re still too proud to just check the map), but you remember that its (total) derivative is exactly the velocity vector! Specifically, the horizontal (resp. vertical) component of the velocity is just the derivative of the horizontal (resp. vertical) component of the position with respect to time:

$$x'(t) = v_1(t), \qquad y'(t) = v_2(t).$$

Therefore, you realise that the Fundamental Theorem of Calculus immediately generalises to higher dimensions:

$$\int_a^b \mathbf{r}'(t)\,dt = \mathbf{r}(b) - \mathbf{r}(a).$$
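Component by component, this is nothing new. The sketch below integrates a 2D velocity with the same left-endpoint sums and compares the result with the change in position. The position $\mathbf{r}(t) = (50t,\ 10t^2)$ (in km, with $t$ in hours) is a hypothetical example, so its velocity is $\mathbf{r}'(t) = (50,\ 20t)$.

```python
def integrate_componentwise(v, a: float, b: float, n: int) -> list[float]:
    """Left-endpoint sums applied to each component of a vector-valued function v(t)."""
    dt = (b - a) / n
    total = [0.0] * len(v(a))
    for i in range(n):
        for k, v_k in enumerate(v(a + i * dt)):
            total[k] += v_k * dt
    return total


def position(t: float) -> tuple[float, float]:
    """Hypothetical position on the map (km) after t hours."""
    return (50.0 * t, 10.0 * t * t)


def velocity(t: float) -> tuple[float, float]:
    """Derivative of position(t), in km/h."""
    return (50.0, 20.0 * t)


a, b = 0.0, 2.0
displacement = integrate_componentwise(velocity, a, b, 100_000)
expected = [pb - pa for pa, pb in zip(position(a), position(b))]
print(displacement, expected)
```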

You now lie back, relieved that you’ve accounted for everything. You can finally rest. You look out and watch the moonlit trees as they march over the gentle hills of their domain—

—hills?

You jolt back awake. This isn’t Saskatchewan! You forgot to account for vertical displacement. First, you shrug: if you just use a 3D velocity vector $\mathbf{v}(t) = (v_1(t), v_2(t), v_3(t))$, then the calculation is exactly the same as before, since you just need to integrate each component separately. However, you realise that you don’t really know how to determine your elevation just from sitting in the car… there isn’t really any reference point. You take a drink from your water bottle and collect your thoughts.

Winding paths, up and down hills

That’s when you notice: the surface of the water in your bottle is always level! This gives you an absolute reference point from which you can determine the slope of the car at any point! You collect your data and record that whenever you are in position $\mathbf{p}$ on the map, the vehicle makes a vertical slope of $m(\mathbf{p})$ in the direction of the vehicle. Now, you have enough information to calculate your vertical displacement through the trip; it’s just a matter of using the information properly. Recall that the (vertical) slope calculates the ratio

$$\text{slope} = \frac{\text{change in height}}{\text{distance travelled}}.$$

We know the slope, and we can calculate how far we travelled. What we want to know is our change in height, so rearrange the formula as

$$\text{change in height} = \text{slope} \times \text{distance travelled}.$$

In fancier symbols, we can just write this as

$$\Delta h = m(\mathbf{p})\,\Delta s,$$

where $h$ indicates your vertical position (height), and $s$ denotes your position on the road, measured as distance travelled along it (we originally used $x$ for position, but now that position is 2D we change letters).

Look familiar? This calculation assumes that the slope remains constant throughout the distance travelled, but since the roads are hilly, we can only use this as an approximation. By sampling more frequently and taking $\Delta s\to 0$, we can assume that the slope is constant in each interval and then add up these quantities. This is just another integral, so we determine that the overall change in height is just given by the integral

$$\int_C m\,ds,$$

where $C$ denotes the 2D curve describing the entire road trip (i.e., the trace of $\mathbf{r}(t)$ for $a \le t \le b$). While this is symbolically nice, we haven’t actually determined how to calculate $ds$…

Again, to simplify the calculation, pretend that (for a short distance) the car travels in a straight line. If you knew that the car travelled $\Delta x$ and $\Delta y$ in each coordinate (i.e., east and north, respectively), then the Pythagorean Theorem would tell you what the total distance travelled is:

$$\Delta s = \sqrt{(\Delta x)^2 + (\Delta y)^2}.$$

Of course, you don’t actually know the car’s eastward and northward displacements at any position directly: you just know the car’s velocity vector. Fortunately, this is all you need: assuming the eastward (resp. northward) velocity of the car remains constant for a short period of time $\Delta t$, then the total eastward (resp. northward) displacement is just given by $\Delta x \approx x'(t)\,\Delta t$ (resp. $\Delta y \approx y'(t)\,\Delta t$). Plugging this into the above Pythagorean equation, we get

$$\Delta s \approx \sqrt{\big(x'(t)\,\Delta t\big)^2 + \big(y'(t)\,\Delta t\big)^2} = \sqrt{x'(t)^2 + y'(t)^2}\;\Delta t.$$

Notice that $\Delta t$ completely factors out! The expression that’s left is the length (i.e., Euclidean norm) of the velocity vector $\mathbf{r}'(t) = (x'(t), y'(t))$; this is precisely the speed of the car! This shouldn’t be a surprise: we’ve just re-calculated that the distance travelled in that time interval is the speed of the car multiplied by the time interval:

$$ds = \|\mathbf{r}'(t)\|\,dt.$$

If you integrate this quantity over the entire curve $C$, you just recover what you did at the very beginning: the total mileage of the car is the integral of the car speed over the duration of the trip. Therefore, “$ds$” is often called the length element. We are finally in a position where we can compute the line integral $\int_C m\,ds$. To give this a convenient overall formula, recall that $\mathbf{r}'(t)$ is the total derivative of the coordinates of the car over time. This gives us the formula

$$\int_C m\,ds = \int_a^b m\big(\mathbf{r}(t)\big)\,\|\mathbf{r}'(t)\|\,dt.$$

If we write $\mathbf{r}(t) = (x(t), y(t))$, then the above formula has an even more explicit form:

$$\int_C m\,ds = \int_a^b m\big(x(t), y(t)\big)\sqrt{x'(t)^2 + y'(t)^2}\,dt.$$
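Here is a rough numerical version of the explicit formula, again with left-endpoint sums. The road is hypothetical: a circle of radius 2 km traversed once, and with $m \equiv 1$ the line integral should return the plain mileage $4\pi \approx 12.57$ km. Tracing the same circle twice as fast over half the time should give the same answer, since $ds$ only sees the distance covered.

```python
import math


def line_integral_ds(m, r, r_prime, a: float, b: float, n: int = 10_000) -> float:
    """Approximate the integral of m over C with respect to arclength.

    Uses ds = |r'(t)| dt, so the integrand is m(r(t)) * sqrt(x'(t)^2 + y'(t)^2).
    """
    dt = (b - a) / n
    total = 0.0
    for i in range(n):
        t = a + i * dt
        x, y = r(t)
        dx_dt, dy_dt = r_prime(t)
        total += m(x, y) * math.hypot(dx_dt, dy_dt) * dt
    return total


def r(t):
    """Hypothetical road: circle of radius 2 (km), once around for t in [0, 2*pi]."""
    return (2 * math.cos(t), 2 * math.sin(t))


def r_prime(t):
    return (-2 * math.sin(t), 2 * math.cos(t))


def r_fast(t):
    """The same circle traced twice as fast, for t in [0, pi]."""
    return (2 * math.cos(2 * t), 2 * math.sin(2 * t))


def r_fast_prime(t):
    return (-4 * math.sin(2 * t), 4 * math.cos(2 * t))


def unit_slope(x, y):
    return 1.0  # m = 1 turns the line integral into plain mileage


print(line_integral_ds(unit_slope, r, r_prime, 0.0, 2 * math.pi))
print(line_integral_ds(unit_slope, r_fast, r_fast_prime, 0.0, math.pi))
```

Doubling the speed doubles $\|\mathbf{r}'(t)\|$ but halves the time interval, so both calls agree up to rounding.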

The line integral $\int_C m\,ds$ calculates the total vertical displacement of the car during the road trip, but bells continue to ring in your mind (no, not Christmas bells): if $m$ calculates a slope of some kind, then isn’t it a derivative of something? Let $h(\mathbf{p})$ denote the actual elevation of the land at any point $\mathbf{p}$; then indeed $m(\mathbf{p})$ is the directional derivative of $h$ in the direction of the car’s velocity at the point $\mathbf{p}$.

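Remembering back to computing derivatives of higher-dimensional functions, you recall that directional derivatives can be computed using the gradient $\nabla h$: the directional derivative of $h$ in the direction of the unit vector $\mathbf{u}$ is given by

$$D_{\mathbf{u}}h(\mathbf{p}) = \nabla h(\mathbf{p})\cdot\mathbf{u}.$$

It’s important that $\mathbf{u}$ is a unit vector in this calculation. If we are given a general (nonzero) vector $\mathbf{w}$, then the unit vector describing its direction can be calculated by normalising it as $\mathbf{w}/\|\mathbf{w}\|$. Therefore, the slope function $m$ can be written in terms of $\nabla h$ as

$$m\big(\mathbf{r}(t)\big) = \nabla h\big(\mathbf{r}(t)\big)\cdot\frac{\mathbf{r}'(t)}{\|\mathbf{r}'(t)\|}.$$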
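The gradient formula for the slope can be checked numerically. The sketch below assumes a made-up elevation function $h(x, y) = 50\sin x\cos y$ (its gradient is written out by hand), computes the slope in the direction of a non-unit velocity vector by normalising it first, and compares the result with a central finite difference of $h$ along the same direction.

```python
import math


def h(x: float, y: float) -> float:
    """Hypothetical elevation (metres) at map position (x, y)."""
    return 50.0 * math.sin(x) * math.cos(y)


def grad_h(x: float, y: float) -> tuple[float, float]:
    """Gradient of h, computed by hand."""
    return (50.0 * math.cos(x) * math.cos(y), -50.0 * math.sin(x) * math.sin(y))


def slope(p: tuple[float, float], w: tuple[float, float]) -> float:
    """Directional derivative of h at p in the direction of the nonzero vector w."""
    norm = math.hypot(*w)
    u = (w[0] / norm, w[1] / norm)  # normalise: only the direction of w matters
    g = grad_h(*p)
    return g[0] * u[0] + g[1] * u[1]


def slope_finite_difference(p, w, eps: float = 1e-6) -> float:
    """Central difference of h along the unit vector w / |w|."""
    norm = math.hypot(*w)
    ux, uy = w[0] / norm, w[1] / norm
    ahead = h(p[0] + eps * ux, p[1] + eps * uy)
    behind = h(p[0] - eps * ux, p[1] - eps * uy)
    return (ahead - behind) / (2 * eps)


p = (0.3, 0.7)
velocity = (3.0, 4.0)  # length 5; the slope is the same for (6.0, 8.0)
print(slope(p, velocity), slope_finite_difference(p, velocity))
```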

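Interesting: if you recall, $ds = \|\mathbf{r}'(t)\|\,dt$. This means that integrating $m$ with respect to the length element causes the norms $\|\mathbf{r}'(t)\|$ to cancel out! Therefore, plugging this in, we get that

$$\int_C m\,ds = \int_a^b \nabla h\big(\mathbf{r}(t)\big)\cdot\frac{\mathbf{r}'(t)}{\|\mathbf{r}'(t)\|}\,\|\mathbf{r}'(t)\|\,dt = \int_a^b \nabla h\big(\mathbf{r}(t)\big)\cdot\mathbf{r}'(t)\,dt.$$

If we write out the components of $\nabla h$ (which may be called a vector field) as $\nabla h = (F_1, F_2)$, then the above dot product may be expanded to give an even more explicit form

$$\int_a^b \Big(F_1\big(\mathbf{r}(t)\big)\,x'(t) + F_2\big(\mathbf{r}(t)\big)\,y'(t)\Big)\,dt.$$

By “cancelling out” the $dt$ terms (writing $x'(t)\,dt = dx$ and $y'(t)\,dt = dy$), this gives us some of the lazier notations for the same line integral:

$$\int_C \nabla h\cdot d\mathbf{r} = \int_C F_1\,dx + F_2\,dy,$$

but I digress. In any case, because the total vertical displacement can be computed most simply as the difference $h\big(\mathbf{r}(b)\big) - h\big(\mathbf{r}(a)\big)$, your derivation above can be summarised as: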

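Fundamental Theorem of Calculus for line integrals. If $\mathbf{F} = \nabla h$ for some ($n$-variate) function $h$, and $C$ is a curve that is parametrised by some $\mathbf{r}\colon[a,b]\to\mathbb{R}^n$, then

$$\int_C \mathbf{F}\cdot d\mathbf{r} = h\big(\mathbf{r}(b)\big) - h\big(\mathbf{r}(a)\big).$$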
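To see the theorem at work, the sketch below approximates $\int_a^b \nabla h(\mathbf{r}(t))\cdot\mathbf{r}'(t)\,dt$ with left-endpoint sums along two different hypothetical roads from $(1, 0)$ to $(0, 1)$ (a quarter circle and a straight segment), using the made-up height function $h(x, y) = x^2 y + y$. Both should approach $h(0, 1) - h(1, 0) = 1$, regardless of the route.

```python
import math


def h(x: float, y: float) -> float:
    """Hypothetical height function."""
    return x * x * y + y


def grad_h(x: float, y: float) -> tuple[float, float]:
    return (2 * x * y, x * x + 1)


def gradient_line_integral(grad, r, r_prime, a: float, b: float, n: int = 100_000) -> float:
    """Left-endpoint approximation of the integral of grad(r(t)) . r'(t) over [a, b]."""
    dt = (b - a) / n
    total = 0.0
    for i in range(n):
        t = a + i * dt
        gx, gy = grad(*r(t))
        vx, vy = r_prime(t)
        total += (gx * vx + gy * vy) * dt
    return total


# Route 1: quarter of the unit circle, t in [0, pi/2].
arc = gradient_line_integral(
    grad_h,
    lambda t: (math.cos(t), math.sin(t)),
    lambda t: (-math.sin(t), math.cos(t)),
    0.0, math.pi / 2,
)
# Route 2: straight segment, t in [0, 1].
segment = gradient_line_integral(
    grad_h,
    lambda t: (1 - t, t),
    lambda t: (-1.0, 1.0),
    0.0, 1.0,
)
print(arc, segment, h(0, 1) - h(1, 0))
```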

Victory! Even you have to admit you’ve done an impressive amount of impromptu mathematics thus far. Surely you must be coming close to your travel destination by now.

Don’t call your driver Shirley: to your surprise, there is still quite a ways to go. “Unbelievable,” you think. “With how much time has passed, we must have travelled halfway around the world by now!” Your exasperation comes to an abrupt halt: around the world? Egad; you haven’t accounted for the curvature of the Earth.

Going great lengths on Earth

Fortunately, you already have the groundwork necessary for talking about calculus on Earth: smooth manifolds. For simplicity, you model the Earth with the standard 2-sphere

$$S^2 = \{(x, y, z)\in\mathbb{R}^3 : x^2 + y^2 + z^2 = 1\}.$$

Of course, this is just a model. You know there are mountains and valleys on Earth, but you’ll deal with that issue later; the more pressing issue is how to make sure that you track distances on the sphere appropriately. Given two points on a 2-sphere, how do you determine their distance?

At face value, you could just embed the sphere into three-dimensional space and then measure the points’ Euclidean distance, but this measures the length of the straight line segment between the points, which tunnels through the Earth. Given what you’re trying to do (measure the mileage of the car on Earth), this isn’t a particularly useful notion of distance. A more relevant measurement is the length of arcs on Earth between the points, rather than straight lines. If you wanted to get the distance between points from the perspective of someone living on the 2-sphere, you could then just measure the smallest such length (which in this case would be the length of the shorter great-circle arc connecting the two points, a length-minimising geodesic).
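To get a feel for how different the two notions are, the sketch below places two points on a sphere by latitude and longitude and computes both the straight-line chord through the Earth and the great-circle arc length. The radius 6371 km is an assumed mean Earth radius, in keeping with the spherical model.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean radius for the spherical model


def to_unit_vector(lat_deg: float, lon_deg: float) -> tuple[float, float, float]:
    """Point on the unit sphere with the given latitude and longitude (degrees)."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    return (math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat))


def chord_and_arc(p, q, radius: float = EARTH_RADIUS_KM) -> tuple[float, float]:
    """Return (straight-line distance through the sphere, great-circle distance)."""
    u, v = to_unit_vector(*p), to_unit_vector(*q)
    dot = sum(a * b for a, b in zip(u, v))
    cross = (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])
    # Central angle between the points; atan2 stays accurate for tiny and near-antipodal angles.
    angle = math.atan2(math.hypot(*cross), dot)
    chord = 2 * radius * math.sin(angle / 2)
    arc = radius * angle
    return chord, arc


north_pole, on_equator = (90.0, 0.0), (0.0, 0.0)
chord, arc = chord_and_arc(north_pole, on_equator)
print(f"chord: {chord:.1f} km, great-circle arc: {arc:.1f} km")
```

Only the arc length is something a car could actually accumulate on its odometer; the chord is the embedding-dependent distance the text warns about.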

Again, you could just embed the 2-sphere into three-dimensional (Euclidean) space and then measure the length of any arc through this embedding, but this is more of an extrinsic definition of arclength and could very well depend on how you embed the 2-sphere into Euclidean space, so it’s not an entirely satisfactory answer. Is there a way to measure these arclengths without an embedding?

To help answer this question, you revisit your formula for arclength in Euclidean space. If $C$ is a curve parametrised by a (smooth) function $\mathbf{r}\colon[a,b]\to\mathbb{R}^n$, then the length of $C$ was given by the integral

$$L(C) = \int_C ds = \int_a^b \|\mathbf{r}'(t)\|\,dt.$$

In English, the length of the curve is just determined as follows: have a particle travel along the curve and track its speed over time (this is $\|\mathbf{r}'(t)\|$), then add this over the entire duration of the travel. On a manifold $M$, an arc is replaced by the trace of a function $\gamma\colon[a,b]\to M$, so what would the analogue in this setting look like?

If you picture $\gamma$ as parametrising the path of a particle on the manifold, then you recall that the velocity $\gamma'(t)$ of the particle at a given time $t$ is precisely a tangent vector of $M$ at the point $\gamma(t)$; that is, an element of $T_{\gamma(t)}M$. The speed of the particle would then be the length of this tangent vector.

Since $T_pM$ is a (real) vector space of dimension equal to the dimension of the manifold $M$ (say of dimension $n$), it might be tempting to take the Euclidean length of these tangent vectors. However, there’s a small issue: the Euclidean metric on $\mathbb{R}^n$ is determined by the dot product, and the dot product is computed using the standard basis of $\mathbb{R}^n$. Although $T_pM$ can be given a basis for every point $p\in M$, there isn’t actually any canonical way of doing it in general. Usually, the basis is given by the partial derivatives $\partial/\partial x^1|_p, \dots, \partial/\partial x^n|_p$, but this basis depends on the choice of coordinate chart $(U; x^1, \dots, x^n)$ at the point.

The point is that a canonical basis can’t be chosen for a general manifold in a consistent way (manifolds that admit a smoothly varying basis of their tangent spaces are called parallelisable; the 2-sphere, for instance, is not). Therefore, to be able to measure arclengths, you need to endow the manifold with additional structure that gives an inner product on tangent spaces in a “nice” way. This is done by endowing the manifold with a function $g_p\colon T_pM\times T_pM\to\mathbb{R}$ at every point $p\in M$ that looks like the dot product, and changes smoothly as you change $p$. A manifold equipped with such a structure is called a Riemannian manifold. The functions $g_p$ form the so-called Riemannian metric.

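Formal definition (skippable). A Riemannian $n$-manifold is a pair $(M, g)$ where $M$ is a smooth $n$-manifold, and $g$ assigns to every point $p\in M$ an inner product $g_p\colon T_pM\times T_pM\to\mathbb{R}$ smoothly in the sense that if $x^1, \dots, x^n$ are local coordinates on an open set $U\subseteq M$, then for all $i, j\in\{1, \dots, n\}$,

$$g_{ij}(p) = g_p\!\left(\frac{\partial}{\partial x^i}\bigg|_p, \frac{\partial}{\partial x^j}\bigg|_p\right)$$

is a smooth function of $p$ (after identifying $U$ with an open subset of $\mathbb{R}^n$ via the local coordinates). We may also denote the Riemannian metric by $ds^2$.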

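Given a Riemannian metric, you can then define norms on the tangent spaces in the same way as you would with the dot product in Euclidean space: the norm of a tangent vector $v\in T_pM$ is just $\|v\| = \sqrt{g_p(v, v)}$. Now, if $\gamma\colon[a,b]\to M$ parametrises some curve $C$ in our Riemannian manifold, then its length is the (ordinary) integral

$$L(C) = \int_a^b \|\gamma'(t)\|\,dt = \int_a^b \sqrt{g_{\gamma(t)}\big(\gamma'(t), \gamma'(t)\big)}\,dt.$$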
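The length formula only ever needs the matrix of inner products $g_{ij}$ in some chart. As an illustration (the chart and curve are our own choices), the sketch below computes $\int_a^b \sqrt{g(\gamma', \gamma')}\,dt$ with a midpoint sum (a slight variant of the left-endpoint sums used earlier), once for a circle of radius 2 in Cartesian coordinates, where $g$ is the identity matrix, and once in polar coordinates $(\rho, \phi)$ on the same plane, where $g = \operatorname{diag}(1, \rho^2)$. The matrices differ, but the lengths agree.

```python
import math


def curve_length(metric, gamma, gamma_prime, a: float, b: float, n: int = 10_000) -> float:
    """Length of a curve given in local coordinates: integral of sqrt(g(gamma', gamma')) dt.

    metric(p) returns the matrix [[g11, g12], [g21, g22]] of the metric at coordinates p.
    Uses the midpoint rule with n subintervals.
    """
    dt = (b - a) / n
    total = 0.0
    for i in range(n):
        t = a + (i + 0.5) * dt
        G = metric(gamma(t))
        v = gamma_prime(t)
        quad = sum(G[j][k] * v[j] * v[k] for j in range(2) for k in range(2))
        total += math.sqrt(quad) * dt
    return total


def euclidean_metric(p):
    return [[1.0, 0.0], [0.0, 1.0]]


def polar_metric(p):
    rho, _phi = p
    return [[1.0, 0.0], [0.0, rho * rho]]  # ds^2 = drho^2 + rho^2 dphi^2


# Circle of radius 2 in Cartesian coordinates (x, y).
cartesian = curve_length(
    euclidean_metric,
    lambda t: (2 * math.cos(t), 2 * math.sin(t)),
    lambda t: (-2 * math.sin(t), 2 * math.cos(t)),
    0.0, 2 * math.pi,
)
# The same circle in polar coordinates (rho, phi) = (2, t).
polar = curve_length(polar_metric, lambda t: (2.0, t), lambda t: (0.0, 1.0), 0.0, 2 * math.pi)
print(cartesian, polar, 4 * math.pi)
```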

Once you find an appropriate Riemannian metric on the 2-sphere, this length integral would handle the total distance travelled by the car, given that the Earth is perfectly spherical. This is a similar situation to when you forgot to account for hills. To account for mountains and valleys, you need to introduce a height function $h\colon S^2\to\mathbb{R}$ which gives you the elevation $h(p)$ at any point $p\in S^2$. A suitable integral involving this function should then compute the total vertical displacement.

By the same reasoning as in the Euclidean setting, you want to integrate the directional derivative of $h$ along the curve $C$. In this setting (on a general manifold $M$, which for us is $S^2$), the analogue of the gradient of $h$ is just its derivative $dh$. At any point $p$, this gives a linear functional $dh_p\colon T_pM\to T_{h(p)}\mathbb{R}\cong\mathbb{R}$; that is, an element of the dual space of $T_pM$.

Recall that the derivative of a map between manifolds is given by the Jacobian matrix when written in terms of (partial derivatives of) local coordinates. In particular, $dh_p$ locally looks like the gradient of $h$ at any point, and the value $dh_p(u)$ for a (unit) tangent vector $u\in T_pM$ is therefore locally the same as the directional derivative of $h$ in the direction $u$. Since directional derivatives are a local concept, this makes the analogy “solid.” In particular, integrating the directional derivatives over the arc recovers the same old integral computation as before: if $C$ is a curve parametrised by the function $\gamma\colon[a,b]\to M$, then (using the linearity of $dh_{\gamma(t)}$)

$$\int_C dh_{\gamma(t)}\!\left(\frac{\gamma'(t)}{\|\gamma'(t)\|}\right)ds = \int_a^b dh_{\gamma(t)}\!\left(\frac{\gamma'(t)}{\|\gamma'(t)\|}\right)\|\gamma'(t)\|\,dt = \int_a^b dh_{\gamma(t)}\big(\gamma'(t)\big)\,dt.$$

In particular, the Riemannian metric cancels out! Therefore, although we need a Riemannian metric to integrate with respect to arclength, Riemannian structure is unnecessary for integrating… things that look like $dh$.

What exactly are such things? In the Euclidean setting, we treated the gradient as a sort of vector field, but the fact that $dh_p$ is a map $T_pM\to\mathbb{R}$ shows that this perspective is not quite perfect. In particular, since we only used the gradient as a means to compute directional derivatives (via the dot product), it seems more appropriate to view the gradient as a covector field; that is, a smoothly varying family of linear functionals on the tangent spaces. We could afford to oversimplify the gradient as a vector field because the dot product identifies $\mathbb{R}^n$ with its dual space, but on a manifold without such a preferred identification, the oversimplification hampers our understanding of the theory.

But why would we prefer linear functionals on the tangent space over actual tangent vectors when integrating? It may not entirely make sense why these are suitable: an integral is fundamentally a formalisation of an “infinite sum of infinitesimal quantities,” so what do linear functionals have to do with infinitesimals? To answer this, let’s try to be more precise about what “infinitesimal changes” should be on a manifold.

There is no single way to go about this, but first we should declare what we intend to measure infinitesimal changes of. This is fairly simple: for our purposes (integration), we’re mostly interested in infinitesimal changes of functions. Note that we’re talking about the infinitesimal changes themselves, rather than the infinitesimal rates of change (we have already figured out the latter up to the generality of manifolds; in symbols, for a function $f$, we are now more interested in $df$ rather than $\frac{df}{dx}$). Therefore, for the sake of integrating, we want to define a space

$$T_p^*M = \{\text{infinitesimal changes of functions at } p\}.$$

In particular, we’re less concerned with specific functions, and are more focused on their infinitesimal changes. Therefore, we should consider two functions defined around a point $p$ to be “the same” as elements of $T_p^*M$ if their infinitesimal changes are the same at the point $p$ in some sense. Since we only care about how functions behave in the immediate vicinity of the point $p$, a step towards this end would be to consider two functions to be “the same” if they are equal in a sufficiently small neighbourhood of the point $p$. This defines the space $C^\infty_p$ of germs of (smooth) functions defined near the point $p$.

Germs of functions almost extract the infinitesimal information of a function at a point; they extract the local information of a function. However, since we’re more interested in infinitesimal changes of a function, it doesn’t even matter to us what the function is equal to at $p$: the infinitesimal changes of a function $f$ ought to be the same as the infinitesimal changes of the translated function $f + c$, where $c$ is some constant. We can go about ignoring the effect of this translation in two (equivalent) ways:

- (quotient) we can declare that two functions $f, g$ are “the same” if their difference $f - g$ is equal to a constant function

- (normalise) we can just restrict our attention to those functions $f$ where $f(p) = 0$

Choose the latter for simplicity and define

$$\mathfrak{m}_p = \{f\in C^\infty_p : f(p) = 0\}.$$

Note that for any function $f\in C^\infty_p$, we can normalise it to get an element of $\mathfrak{m}_p$ by replacing it with the difference $f - f(p)$. This is how to show that the two approaches mentioned above are equivalent.

Remark (skippable). In fancy jargon, a ring of germs at a point is precisely a stalk of the sheaf of functions on the manifold: if $C^\infty(U)$ is the ring of smooth functions on an open subset $U\subseteq M$, then

$$C^\infty_p = \varinjlim_{U\ni p} C^\infty(U).$$

The reason we denote the subcollection of (germs of) functions that vanish at $p$ by $\mathfrak{m}_p$ is because it is the unique maximal ideal in $C^\infty_p$.

The space $\mathfrak{m}_p$ encodes the “local perturbations” of a function, but unfortunately does not encode the infinitesimal perturbations. To see evidence of this, consider the functions below:

No matter how closely you zoom into the origin, these functions are never equal away from zero, meaning that their germs are different in $\mathfrak{m}_0$. However, it seems like their infinitesimal perturbations at zero should be equal. The problem is most obvious when you consider a function like $f(x) = x^2$. At the origin, how would you describe its infinitesimal perturbation? There should be none: the function is essentially flat at zero. Therefore, such a function should be trivial (i.e., zero) in $T_0^*\mathbb{R}$.

You might notice at this point that although we’ve made a distinction between infinitesimal changes and derivatives, we’re essentially declaring that two functions have the same infinitesimal changes if their derivatives are the same! Therefore, we define $T_p^*M$ to be the space $\mathfrak{m}_p$, but we think of two functions $f, g\in\mathfrak{m}_p$ as “the same” if $df_p = dg_p$. This makes $T_p^*M$ really look like the space of infinitesimal changes of functions at $p$.

So what does this have to do with linear functionals on the tangent space? Well, it turns out that there is a natural identification of $T_p^*M$ with the dual space $(T_pM)^*$ of the tangent space!

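Indeed, the derivative defines a linear map $T_p^*M\to(T_pM)^*$ by sending a function $f$ to its derivative $df_p\colon T_pM\to\mathbb{R}$. Since we identify functions in $T_p^*M$ if they have the same derivative, this linear map is injective. On the other hand, fix a local coordinate system $x^1, \dots, x^n$ around $p$, centred so that $x^i(p) = 0$. Each coordinate is a local function, where $x^i$ takes in a point near $p$ and spits out its $i$th coordinate in this local coordinate system. This gives us $n$ functions $x^1, \dots, x^n$ in $T_p^*M$, and it turns out that they are linearly independent! Indeed, this is best seen by checking their derivatives: now that we have local coordinates, derivatives in these coordinates look like gradients, and so in these coordinates,

$$dx^i_p\!\left(\frac{\partial}{\partial x^j}\bigg|_p\right) = \frac{\partial x^i}{\partial x^j}(p) = \delta^i_j, \qquad \text{where } \delta^i_j = 1 \text{ if } i = j \text{ and } 0 \text{ otherwise},$$

showing that their derivatives are linearly independent in $(T_pM)^*$. Since this means we have an injective linear transformation from a space $T_p^*M$ of dimension at least $n$ (it contains the $n$ linearly independent elements $x^1, \dots, x^n$) to a space $(T_pM)^*$ of dimension $n$, it follows that this transformation must be a linear isomorphism: there is a natural correspondence between infinitesimal changes of functions and linear functionals on the tangent space. What’s more, our above argument also proves that any local coordinate system near $p$ gives us a basis of $T_p^*M$ given by the differentials $dx^1_p, \dots, dx^n_p$, and this basis is dual to the basis $\partial/\partial x^1|_p, \dots, \partial/\partial x^n|_p$ of $T_pM$ in the sense that

$$dx^i_p\!\left(\frac{\partial}{\partial x^j}\bigg|_p\right) = \delta^i_j.$$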
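Inside a single tangent space, this duality is plain linear algebra: if the basis vectors are the columns of an invertible matrix $B$, then the rows of $B^{-1}$ are the dual covectors, because $B^{-1}B = I$ says exactly that the $i$th covector evaluates to $\delta^i_j$ on the $j$th vector. The 2D sketch below, with a hypothetical non-orthonormal basis, checks this.

```python
def inverse_2x2(m):
    """Inverse of a 2x2 matrix [[a, b], [c, d]]."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("the vectors are linearly dependent, so they are not a basis")
    return [[d / det, -b / det], [-c / det, a / det]]


def dual_basis(e1, e2):
    """Covectors (as row vectors) eps1, eps2 with eps_i(e_j) = 1 if i == j and 0 otherwise."""
    B = [[e1[0], e2[0]], [e1[1], e2[1]]]  # basis vectors as columns
    return inverse_2x2(B)  # the rows of B^{-1} form the dual basis


def pair(covector, vector):
    """Evaluate a covector (row vector) on a vector."""
    return sum(c * v for c, v in zip(covector, vector))


basis = [(2.0, 0.0), (1.0, 1.0)]  # hypothetical basis of a 2D tangent space
eps = dual_basis(*basis)
table = [[pair(eps[i], basis[j]) for j in range(2)] for i in range(2)]
print(table)
```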

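Summary/Definition. For a smooth $n$-manifold $M$ and a point $p\in M$, define the cotangent space at $p$ to be the dual space $T_p^*M = (T_pM)^*$ of the tangent space at $p$. Moreover, if $x^1, \dots, x^n$ is a local coordinate system near $p$, then denote the dual basis in $T_p^*M$ corresponding to $\partial/\partial x^1|_p, \dots, \partial/\partial x^n|_p$ by $dx^1_p, \dots, dx^n_p$.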

Remark (skippable). The above work is just the first isomorphism theorem spelled out: using the local coordinate functions, we proved that the derivative map $d\colon\mathfrak{m}_p\to(T_pM)^*$, $f\mapsto df_p$, is surjective, and therefore induces an isomorphism $\mathfrak{m}_p/\ker d\cong(T_pM)^*$. On the other hand, in the discussion, we defined the space $T_p^*M$ as precisely this quotient.

Taking one more step, we get a more useful definition of the cotangent space. Given two smooth functions $f, g\in\mathfrak{m}_p$ (i.e., that vanish at $p$), their product has a trivial derivative at $p$ by the product rule: $d(fg)_p = f(p)\,dg_p + g(p)\,df_p = 0$. Therefore, we get an inclusion $\mathfrak{m}_p^2\subseteq\ker d$ (where $\mathfrak{m}_p^2$ is the ideal generated by products of elements of $\mathfrak{m}_p$). This inclusion is in fact an equality (a high-powered reason is by looking at Taylor expansions in local coordinates), meaning $\ker d = \mathfrak{m}_p^2$. This gives another equivalent definition of the cotangent space as the quotient $T_p^*M\cong\mathfrak{m}_p/\mathfrak{m}_p^2$, which comes in handy, for instance, in algebraic geometry.

Using this newfound notation, we get a formalisation of the differential of a function discussed back when you studied derivatives. If we fix a local coordinate system $x^1, \dots, x^n$ around $p$, then the derivative of a function $f$ looks like a gradient in the induced basis on the tangent space since $df_p\big(\partial/\partial x^i|_p\big) = \frac{\partial f}{\partial x^i}(p)$. Therefore, using the induced basis on the cotangent space, we recover the familiar formula

$$df_p = \sum_{i=1}^n \frac{\partial f}{\partial x^i}(p)\,dx^i_p.$$

In particular, if we smoothly vary our choice of $p$ (as well as the associated local coordinates), the coefficients of each $dx^i_p$ vary smoothly as well. This gives us a better idea of what can be integrated over a curve in a manifold (i.e., what a covector field ought to be): a covector field $\omega$ on our manifold assigns to every point $p\in M$ an element $\omega_p\in T_p^*M$ in a way that “varies smoothly” with $p$. In local coordinates, such a covector field thus takes the form $\omega = \sum_{i=1}^n \omega_i\,dx^i$, where $\omega_1, \dots, \omega_n$ are smooth (local) functions. Because of this form, such covector fields are also called differential 1-forms, and we denote the space of all differential 1-forms on $M$ by $\Omega^1(M)$.

Remark. We make precise the idea of making the choice of cotangent vectors “smooth” by constructing the cotangent bundle $T^*M$ on $M$, which is done in a similar way to the tangent bundle $TM$. In particular, the cotangent bundle is a smooth manifold with a projection map $\pi\colon T^*M\to M$ (sending a cotangent vector in $T_p^*M$ to $p$). With the structure of a vector bundle, a differential 1-form is precisely a smooth section of the cotangent bundle, meaning that it is a smooth map $\omega\colon M\to T^*M$ such that $\pi\circ\omega = \mathrm{id}_M$; that is, $\omega_p\in T_p^*M$ for every $p\in M$.

Analogously, a vector field on $M$ is just a smooth section of the tangent bundle; that is, a smooth map $X\colon M\to TM$ such that $X_p\in T_pM$ for every $p\in M$. In the presence of a Riemannian metric $g$, we get a canonical identification of vector fields with differential 1-forms by identifying the vector field $X$ with the form $X^\flat$ given by $X^\flat_p(v) = g_p(X_p, v)$ for $v\in T_pM$.

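Suppose we have a Riemannian metric on our manifold. Then, we can integrate a differential form $\omega\in\Omega^1(M)$ in the same way that we integrated the derivative of a function as before. Intuitively, given a unit tangent vector $u\in T_pM$, the value $\omega_p(u)$ represents the infinitesimal change described by $\omega$ in the direction $u$. Therefore, to accumulate these directional infinitesimal changes along a curve $C$ parametrised by some function $\gamma\colon[a,b]\to M$, we proceed as before:

$$\int_C \omega := \int_C \omega_{\gamma(t)}\!\left(\frac{\gamma'(t)}{\|\gamma'(t)\|}\right)ds = \int_a^b \omega_{\gamma(t)}\big(\gamma'(t)\big)\,dt.$$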

Once again, the final formula is independent of the metric! Therefore, we can define the integral of a differential 1-form over curves on arbitrary* manifolds this way.

*Technical remark. In general, integration of forms requires first fixing an orientation of the manifold. This is implicit in the above formula, because it assumes that the curve is oriented (for a curve, this means it flows in one direction), and that the parametrisation $\gamma$ preserves this orientation. This is important because if you trace the curve in the other direction, then the resulting integral will be the negative of the original, just like how $\int_b^a f(x)\,dx = -\int_a^b f(x)\,dx$. Once we’re in this situation, the integral $\int_C\omega$ is given by the above formula. It may also be worth noting that every smooth manifold can be given some Riemannian metric.
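The claims about parametrisations and orientation are easy to probe numerically. The sketch below integrates a 1-form $\omega = \omega_1\,dx + \omega_2\,dy$ on the plane using only $\int_a^b \omega_{\gamma(t)}(\gamma'(t))\,dt$ (no metric anywhere), with $\omega = -y\,dx + x\,dy$ chosen purely for illustration, and a midpoint sum in place of left endpoints. Tracing the unit circle counterclockwise at two different speeds gives the same value, $2\pi$, while tracing it clockwise flips the sign.

```python
import math


def integrate_one_form(omega, gamma, gamma_prime, a: float, b: float, n: int = 20_000) -> float:
    """Midpoint-rule approximation of the integral of omega_{gamma(t)}(gamma'(t)) over [a, b].

    omega(x, y) returns the coefficients (w1, w2) of w1 dx + w2 dy at the point (x, y).
    """
    dt = (b - a) / n
    total = 0.0
    for i in range(n):
        t = a + (i + 0.5) * dt
        w1, w2 = omega(*gamma(t))
        vx, vy = gamma_prime(t)
        total += (w1 * vx + w2 * vy) * dt  # the covector evaluated on the velocity
    return total


def omega(x, y):
    return (-y, x)  # omega = -y dx + x dy


# Counterclockwise, constant speed.
ccw = integrate_one_form(omega, lambda t: (math.cos(t), math.sin(t)),
                         lambda t: (-math.sin(t), math.cos(t)), 0.0, 2 * math.pi)
# Counterclockwise again, but with angle s**2: same orientation, different speed.
ccw_slow_start = integrate_one_form(
    omega,
    lambda s: (math.cos(s * s), math.sin(s * s)),
    lambda s: (-2 * s * math.sin(s * s), 2 * s * math.cos(s * s)),
    0.0, math.sqrt(2 * math.pi),
)
# Clockwise: the opposite orientation.
cw = integrate_one_form(omega, lambda t: (math.cos(t), -math.sin(t)),
                        lambda t: (-math.sin(t), -math.cos(t)), 0.0, 2 * math.pi)
print(ccw, ccw_slow_start, cw)
```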

It may have been so long that the original goal has been forgotten, but remember: all this was to understand how to integrate the derivative of a height function on the 2-sphere, and from entirely analogous analysis as in the Euclidean setting, this integral computes the total vertical (i.e., “outward,” in the direction that $h$ measures) displacement accrued along the path travelled. In symbols:

Generalised Stokes’ Theorem in one dimension. Let $M$ be a smooth manifold, and let $C\subseteq M$ be an oriented curve that is parametrised by a smooth (orientation-preserving) function $\gamma\colon[a,b]\to M$. Then for all smooth functions $h\colon M\to\mathbb{R}$, we have

$$\int_C dh = h\big(\gamma(b)\big) - h\big(\gamma(a)\big),$$

which further generalises the “fundamental theorems” of calculus established much earlier.

This would have been a good place to end things, but we haven’t yet established a Riemannian metric on the 2-sphere, so we can’t yet compute the actual arclength of the journey $C$. Therefore, let’s end this by saying a few more words about this.

Measuring great lengths on Earth

First, the notation $ds^2$ for the Riemannian metric suggests that (just like in the Euclidean setting when computing arclengths) the metric is somehow a product of differentials. While not precisely true, there is an element of truth to it. Recall that a Riemannian metric is a smoothly varying assignment of inner products $g_p$ on $T_pM$. Inner products are, in particular, bilinear, and so can instead be described as a linear map $T_pM\otimes T_pM\to\mathbb{R}$ from the tensor product. This means that $g_p$ is a particular element of the dual space $(T_pM\otimes T_pM)^*$. Since tangent spaces are finite-dimensional, we can equivalently identify $g_p$ with a particular element of $T_p^*M\otimes T_p^*M$ in a canonical way.

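Let’s be more explicit about this. If we fix a local coordinate system $x^1, \dots, x^n$, then the Riemannian metric is locally determined by the coefficients

$$g_{ij} = g\!\left(\frac{\partial}{\partial x^i}, \frac{\partial}{\partial x^j}\right)$$

for the coordinate vector fields $\partial/\partial x^i$ in this local coordinate system. With these coefficients, we may write the Riemannian metric in terms of the local coordinates as a sum

$$g = \sum_{i,j=1}^n g_{ij}\,dx^i\otimes dx^j,$$

where each $g_{ij}$ is a smooth function of $p$, and (to be extremely explicit) the tensor product $dx^i_p\otimes dx^j_p$ is a bilinear form completely determined by the rule

$$\big(dx^i_p\otimes dx^j_p\big)(v, w) = dx^i_p(v)\,dx^j_p(w).$$

For instance, the usual Euclidean metric on $\mathbb{R}^n$ is defined by $g = \sum_{i=1}^n dx^i\otimes dx^i$, which (for brevity) may also be written in the familiar form $ds^2 = (dx^1)^2 + \dots + (dx^n)^2$.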

Remark (skippable). This perspective also allows us to be more precise about how a Riemannian metric varies smoothly: the Riemannian metric must in particular be a smooth section of the tensor product bundle $T^*M\otimes T^*M$. For this reason, the Riemannian metric is also often called a metric tensor. More generally, a tensor field of type $(k, l)$ is a smooth section of the vector bundle $TM^{\otimes k}\otimes(T^*M)^{\otimes l}$, showing that the Riemannian metric is a tensor of type $(0, 2)$.

Now for the sake of completeness, let’s derive a metric tensor on the 2-sphere. We do so by parametrising the 2-sphere using the usual polar and azimuthal angles (i.e., spherical coordinates)

$$\Phi(\theta, \varphi) = (R\sin\theta\cos\varphi,\ R\sin\theta\sin\varphi,\ R\cos\theta), \qquad 0 < \theta < \pi,\ 0 < \varphi < 2\pi,$$

where $R$ is a fixed radius (meant to be the radius of the Earth, in your situation). This makes $(\theta, \varphi)$ the coordinate system for the sphere via the mapping $\Phi$. We then define the metric on the sphere by pulling back the Euclidean metric along $\Phi$.

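In general, pulling back a metric $g$ on $N$ along some immersion $F\colon M\to N$ (i.e., $dF$ is injective at every point, which keeps the result positive-definite) gives a metric $F^*g$ on $M$ such that for a local coordinate system $u^1, \dots, u^m$ of $M$ we have

$$(F^*g)_{ij} = g\!\left(dF\Big(\frac{\partial}{\partial u^i}\Big), dF\Big(\frac{\partial}{\partial u^j}\Big)\right).$$

Therefore, the components of the pullback metric are determined by

$$(F^*g)_{ij} = \sum_{k,l=1}^{n} g_{kl}\,\frac{\partial F^k}{\partial u^i}\,\frac{\partial F^l}{\partial u^j},$$

where $F = (F^1, \dots, F^n)$ in a local coordinate system of $N$.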

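In our case, the off-diagonal components of the Euclidean metric tensor are zero (and the diagonal ones are $1$), so the above expression simplifies to $g_{ij} = \frac{\partial\Phi}{\partial u^i}\cdot\frac{\partial\Phi}{\partial u^j}$, with $(u^1, u^2) = (\theta, \varphi)$. In particular:

- $g_{\theta\theta} = \left\|\frac{\partial\Phi}{\partial\theta}\right\|^2 = \big\|(R\cos\theta\cos\varphi,\ R\cos\theta\sin\varphi,\ -R\sin\theta)\big\|^2 = R^2$

- $g_{\theta\varphi} = \frac{\partial\Phi}{\partial\theta}\cdot\frac{\partial\Phi}{\partial\varphi} = -R^2\sin\theta\cos\theta\sin\varphi\cos\varphi + R^2\sin\theta\cos\theta\sin\varphi\cos\varphi + 0 = 0$, and similarly $g_{\varphi\theta} = 0$

- $g_{\varphi\varphi} = \left\|\frac{\partial\Phi}{\partial\varphi}\right\|^2 = \big\|(-R\sin\theta\sin\varphi,\ R\sin\theta\cos\varphi,\ 0)\big\|^2 = R^2\sin^2\theta$

Therefore, the Riemannian metric on the 2-sphere we get as a result is

$$ds^2 = R^2\,d\theta^2 + R^2\sin^2\theta\,d\varphi^2.$$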
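As a final cross-check, the sketch below computes the pulled-back components $g_{ij} = \frac{\partial\Phi}{\partial u^i}\cdot\frac{\partial\Phi}{\partial u^j}$ directly from the partial derivatives of $\Phi$, and then uses them (with a midpoint sum) to measure a parallel, i.e., a circle of constant $\theta$, whose length should be $2\pi R\sin\theta$. The value $R = 6371$ km is an assumed mean Earth radius.

```python
import math

R = 6371.0  # assumed Earth radius in km


def partials(theta: float, phi: float, radius: float = R):
    """dPhi/dtheta and dPhi/dphi for Phi = (R sin(theta) cos(phi), R sin(theta) sin(phi), R cos(theta))."""
    d_theta = (radius * math.cos(theta) * math.cos(phi),
               radius * math.cos(theta) * math.sin(phi),
               -radius * math.sin(theta))
    d_phi = (-radius * math.sin(theta) * math.sin(phi),
             radius * math.sin(theta) * math.cos(phi),
             0.0)
    return d_theta, d_phi


def sphere_metric(theta: float, phi: float, radius: float = R):
    """Pullback of the Euclidean metric: g_ij = (dPhi/du^i) . (dPhi/du^j)."""
    cols = partials(theta, phi, radius)
    return [[sum(a * b for a, b in zip(cols[i], cols[j])) for j in range(2)] for i in range(2)]


def length_on_sphere(gamma, gamma_prime, a: float, b: float, n: int = 10_000) -> float:
    """Midpoint-rule length of a curve (theta(t), phi(t)) using the pulled-back metric."""
    dt = (b - a) / n
    total = 0.0
    for i in range(n):
        t = a + (i + 0.5) * dt
        G = sphere_metric(*gamma(t))
        v = gamma_prime(t)
        total += math.sqrt(sum(G[j][k] * v[j] * v[k] for j in range(2) for k in range(2))) * dt
    return total


theta0 = math.pi / 3  # colatitude 60 degrees, i.e. latitude 30 degrees north
parallel = length_on_sphere(lambda t: (theta0, t), lambda t: (0.0, 1.0), 0.0, 2 * math.pi)
print(sphere_metric(0.7, 1.3))
print(parallel, 2 * math.pi * R * math.sin(theta0))
```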

At last, you feel the car come to a stop, and your mates all cheer. You’ve arrived at your destination, and right on time for you to wrap up this train of thought! While not the most impactful way to finish the thought, you don’t mind because you know nobody else will follow your thoughts far enough to notice.
