- The derivative

- The derivative, in many variables

- “The Earth isn’t flat, but it’s close”

- The derivative of the Earth

The sun is shining, and the breeze entices the leaves of your grand apple tree to sing a joyous tune. You sit at the edge of the balcony and watch the tree branches dance to the rhythm of their song. Amidst the poetry, an apple is fed up with the palpable pretentiousness, and detaches himself from the scene, awaiting the soothing embrace of the grass blades beneath.

As the apple of your eye descends to the earth, you wonder… how fast did the apple fall after one second?

At first, this question seems entirely reasonable… until you realise it doesn’t really make sense. The speed of a car, for instance, is usually measured in kilometres per hour (that is, assuming you live in a country that uses the metric system), which tells you how many kilometres your vehicle would cover if you maintained the given speed for exactly one hour. In order to determine the (average) velocity of an object, such as a car or your dear apple, you need to calculate

$$\text{average velocity} = \frac{\text{change in position}}{\text{change in time}}.$$

In fancier (i.e., mathematical and physical) notation, this would be written as

$$\bar{v} = \frac{\Delta x}{\Delta t}.$$

In particular, the calculation of velocity requires sampling at two separate times. Therefore, it’s rather nonsensical to ask how quickly an apple is moving at a single specific time; that’s like taking a snapshot of the falling apple and trying to use this to determine its velocity.

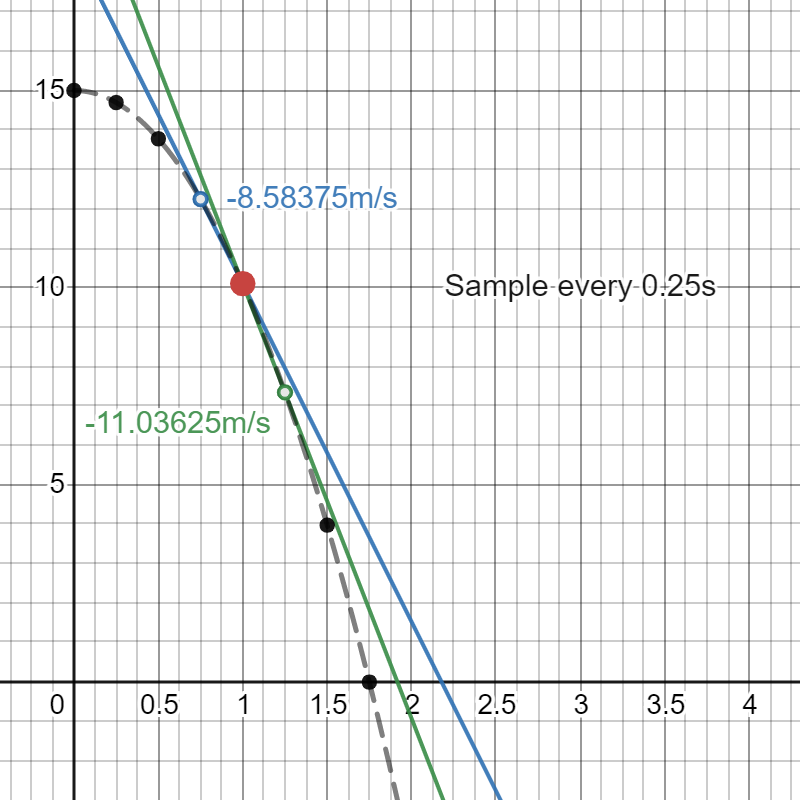

This doesn’t deter you, though, since there still seems to be some merit behind the concept of an “instantaneous velocity.” So, you try to approximate what this quantity should be. You try sampling the position of the apple every $\Delta t = 0.5$ seconds and plot the result.

To estimate the instantaneous velocity at time $t = 1$ (i.e., at the red dot), you consider the average velocity for the 0.5 seconds before the red dot, and also the average velocity for the 0.5 seconds after the red dot:

$$\frac{x(1) - x(0.5)}{0.5} \approx 7.3 \text{ m/s}, \qquad \frac{x(1.5) - x(1)}{0.5} \approx 12.3 \text{ m/s}.$$

These tell you that the instantaneous velocity, whatever it is, must be somewhere between 7.3 and 12.3 metres per second. To improve the approximation, you sample more frequently, thus making $\Delta t$ smaller and smaller.

(You can experiment with this yourself on Desmos.) As $\Delta t$ decreases, you notice that the blue and green lines start to stabilise, and with further investigation, the numbers seem to converge to about 9.81 metres per second. Therefore, you declare that the apple was falling at around 9.81 metres per second exactly one second after it fell off the tree. This makes a lot of sense, so you adopt this as your definition of instantaneous velocity.

More precisely: if the position $x(t)$ (say, of your apple) is given as a function of time $t$, then the instantaneous velocity at a specific time $t$ is defined to be the number that the average speed

$$\frac{\Delta x}{\Delta t} = \frac{x(t + \Delta t) - x(t)}{\Delta t}$$

gets really close to when you take $\Delta t$ to be smaller and smaller. Since $\Delta$ is the Greek letter D and represents “change,” you might think it cute to denote a “really small change” by a little $d$: that is, if $\Delta x$ represents a “change in position,” then $dx$ should represent a “really small change in position.”

In mathematical language, the value that $\frac{\Delta x}{\Delta t}$ approaches when you take $\Delta t$ to be smaller and smaller is called the limit of $\frac{\Delta x}{\Delta t}$ as $\Delta t \to 0$. Think of the limit as a constraint on $\frac{\Delta x}{\Delta t}$, forcing $\frac{\Delta x}{\Delta t}$ to be really close to the limit when $\Delta t$ is really close to zero. To summarise, your instantaneous velocity takes the symbolic equation

$$v(t) = \frac{dx}{dt} = \lim_{\Delta t \to 0} \frac{x(t + \Delta t) - x(t)}{\Delta t}.$$
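The squeeze described above can be reproduced numerically. The following is a minimal Python sketch, not the computation behind the original plot: it assumes the apple falls from rest, so that its position is $x(t) = \tfrac{1}{2} g t^2$ with $g = 9.81$ (an assumption, though it matches the 7.3 and 12.3 metres per second estimates), and the function names are illustrative.

```python
# Assumption (not stated in the post): the apple falls from rest, so the
# distance fallen after t seconds is x(t) = g * t**2 / 2 with g = 9.81 m/s^2.
G = 9.81


def position(t):
    """Hypothetical position function: metres fallen after t seconds."""
    return 0.5 * G * t * t


def backward_average(t, dt):
    """Average velocity over the interval [t - dt, t]."""
    return (position(t) - position(t - dt)) / dt


def forward_average(t, dt):
    """Average velocity over the interval [t, t + dt]."""
    return (position(t + dt) - position(t)) / dt


if __name__ == "__main__":
    # Shrink dt and watch both estimates squeeze towards 9.81.
    for dt in (0.5, 0.1, 0.01, 0.001):
        before = backward_average(1.0, dt)
        after = forward_average(1.0, dt)
        print(f"dt={dt}: before={before:.4f} m/s, after={after:.4f} m/s")
```

Under this assumption the two averages work out to exactly $g(1 - \Delta t/2)$ and $g(1 + \Delta t/2)$, so for $\Delta t = 0.5$ they are $7.3575$ and $12.2625$, and both close in on $9.81$ as $\Delta t \to 0$.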

The derivative

Moving away from physics, you realise that you can do the same thing with other functions $f(x)$. The simplest functions are given by lines, which can be defined by the equation $y = mx + b$. Here, $b$ tells us where the line crosses the $y$-axis, but the more important parameter is $m$, which is called the slope of the line. Explicitly, the slope describes how quickly $y$ changes based on how much $x$ changes, which can be stated as

$$m = \frac{\Delta y}{\Delta x}.$$

With the ideas behind instantaneous velocities, you realise that you can begin to make sense of instantaneous slopes of a function: if you have a function $f(x)$, then you can define its instantaneous slope at a specified point $x = a$ to be

$$f'(a) = \lim_{\Delta x \to 0} \frac{f(a + \Delta x) - f(a)}{\Delta x}.$$

The instantaneous slope at a point is more precisely called the derivative of $f$ at the point $a$. Therefore, you can use this terminology to say that “velocity is the derivative of position with respect to time.”

However, you quickly realise that not all functions have derivatives. For example, the absolute value function $f(x) = |x|$ does not have a well-defined derivative at zero: sampling right before zero gives you a slope of $-1$, while sampling right after zero gives you a slope of $1$. No matter how close together your samples are, they never approach a single limit; in other words, the derivative does not exist. You observe that the cause of this problem is the sharp corner at zero: the derivative of a function only exists if the function looks sufficiently “smooth,” meaning that the function should already look like a line if you zoom in closely enough. Such functions are called differentiable: this is because computing the derivative is called “differentiation” (it computes the ratio between the difference of outputs and the difference of inputs).
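A quick numerical check of the corner at zero, as a sketch with an illustrative helper `one_sided_slopes` (our name, not a library function): for $|x|$ the left and right secant slopes are exactly $-1$ and $1$ no matter how small the step, whereas for the smooth function $x^2$ (our choice of comparison) both slopes shrink towards the common limit $0$.

```python
def one_sided_slopes(f, a, h):
    """Slopes of the secant lines just left of a and just right of a, for a step h > 0."""
    left = (f(a) - f(a - h)) / h
    right = (f(a + h) - f(a)) / h
    return left, right


def square(x):
    return x * x


if __name__ == "__main__":
    for h in (0.1, 0.001, 1e-6):
        # |x| at 0: the left and right slopes never get closer together.
        print("abs   ", h, one_sided_slopes(abs, 0.0, h))
        # x^2 at 0: both slopes approach the same limit, 0.
        print("square", h, one_sided_slopes(square, 0.0, h))
```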

In other words, if you look closely enough at the point $a$, a differentiable function $f(x)$ should look something like $y = mx + b$ for an appropriate line. The slope of this approximate line is exactly the derivative: $m = f'(a)$.

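What this means more concretely is that when you’re close to a point $a$, then $f(x)$ looks approximately like the line

$$y = f(a) + f'(a)(x - a),$$

which is then called the linear approximation of $f$ near $a$. In mathematical symbols, this reads as

$$f(a + \Delta x) = f(a) + f'(a)\,\Delta x + o(\Delta x),$$

where $o(\Delta x)$ denotes a quantity that is significantly smaller than $\Delta x$ as $\Delta x \to 0$ (precisely: $o(\Delta x)/\Delta x \to 0$).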

Note. If the change in $x$ is small enough (so that $\Delta x = dx$), the above identity becomes $df = f'(a)\,dx$, which looks like an “obvious” identity, but is the more precise definition of the differential of $f$.

If you were to graph the linear approximation of a function (e.g., for the position of a falling apple), you would get a better idea of what this line really is.

For a sufficiently smooth function, the linear approximation gives us the line that lies tangent to our function at the point; that is, the line that seems to just “touch” our function without blatantly impaling it. By looking at the graph, you can also see that the linear approximation is actually quite good at estimating differentiable functions! This has great consequences for simplifying calculation and analysis: $f(a + \Delta x)$ is basically the same as $f(a) + f'(a)\,\Delta x$ when $\Delta x$ is very small.
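To check numerically that the linear approximation is good, one can verify that the error $f(a + \Delta x) - f(a) - f'(a)\,\Delta x$ is $o(\Delta x)$, i.e. that it stays small even after dividing by $\Delta x$. This Python sketch uses $f = \sin$ near $a = 1$ purely as an example; `remainder_ratio` is an illustrative name.

```python
import math


def remainder_ratio(f, df, a, dx):
    """Return (f(a + dx) - [f(a) + f'(a) dx]) / dx.

    The remainder is o(dx) exactly when this ratio tends to 0 as dx -> 0.
    """
    linear = f(a) + df(a) * dx
    return (f(a + dx) - linear) / dx


if __name__ == "__main__":
    # Example choice: f = sin near a = 1, whose derivative is cos.
    for dx in (0.1, 0.01, 0.001):
        print(dx, remainder_ratio(math.sin, math.cos, 1.0, dx))
```

By Taylor's theorem the ratio behaves like $-\tfrac{1}{2}\sin(1)\,\Delta x$ for small $\Delta x$, so it shrinks roughly tenfold each time $\Delta x$ does.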

The concept of tangents (like the above) is a way of formalising “flattening” shapes while retaining as much information as possible. This is the idea underlying why you can use a flat, two-dimensional map of a city and reliably eyeball how distances, angles, and sizes compare between different locations, despite the general spherical shape of the Earth: a city is so small compared to the whole Earth that a map—which is exactly a (scaled-down) linear approximation of the true city—barely differs in geometry from the city itself.

For the record, the same cannot be said about any flat, two-dimensional map of the western hemisphere, by the Theorema Egregium.

This being said, we haven’t yet established the mathematics necessary to talk about tangent planes of the Earth. As a first step, we would need to establish a theory of higher-dimensional differentiable functions.

The derivative, in many variables

For example, let’s consider a function $f\colon \mathbb{R}^2 \to \mathbb{R}$. This means that our function takes two inputs $x$ and $y$ and produces a single output $z = f(x, y)$. What should the “derivative” of such a function be? In the one-dimensional case, the derivative was just a number, and this number represented the slope of the tangent line, so a good start is to expect that the derivative of our $f$ ought to measure the “slope” of a tangent plane.

Therefore, declare $f$ to be differentiable at a point $(x_0, y_0)$ if near this point, the function looks approximately like a plane in 3D space

$$z = c + a(x - x_0) + b(y - y_0).$$

Based on the same discussion as in the one-dimensional case, it’s natural to expect that the “derivative” of $f$ should encode the coefficients $a$ and $b$. In particular, the “derivative” should no longer be a single number, but rather a two-dimensional vector.

Understanding one-dimensional derivatives turns out to be sufficient for determining what the coefficients $a$ and $b$ should be. To illustrate, let’s determine $a$. The trick is to think of $x$ as an input variable, and think of $y = y_0$ as a fixed constant, so that $g(x) = f(x, y_0)$ is a one-variable function. Then, our “planar approximation”

$$f(x, y) \approx c + a(x - x_0) + b(y - y_0)$$

reduces to a linear approximation

$$g(x) \approx c + a(x - x_0)$$

for the function $g$. Therefore, the coefficient $a$, being the slope of this linear approximation, must be the derivative of $g$ with respect to $x$. Since this computes part of the derivative for the two-variable function $f$, we call this the partial derivative of $f$ with respect to $x$:

$$a = \frac{\partial f}{\partial x}(x_0, y_0) = \lim_{\Delta x \to 0} \frac{f(x_0 + \Delta x, y_0) - f(x_0, y_0)}{\Delta x}.$$

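Entirely analogously, the coefficient $b$ is the partial derivative of $f$ with respect to $y$:

$$b = \frac{\partial f}{\partial y}(x_0, y_0) = \lim_{\Delta y \to 0} \frac{f(x_0, y_0 + \Delta y) - f(x_0, y_0)}{\Delta y}.$$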

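In summary, a two-dimensional differentiable function admits a planar approximation (which is actually still just called a linear approximation) of the form

$$f(x, y) \approx f(x_0, y_0) + \frac{\partial f}{\partial x}(x_0, y_0)\,(x - x_0) + \frac{\partial f}{\partial y}(x_0, y_0)\,(y - y_0).$$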

Note. By taking the changes in $x$ and $y$ to be very small again, you obtain the definition of the differential of a two-dimensional function $f$, which is $df = \frac{\partial f}{\partial x}\,dx + \frac{\partial f}{\partial y}\,dy$.

The two-dimensional vector which collects these partial derivatives is called the gradient of $f$ and denoted $\nabla f = \left(\frac{\partial f}{\partial x}, \frac{\partial f}{\partial y}\right)$, and this serves as the “derivative” of $f$.
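The “freeze one variable” trick translates directly into code. Below is a minimal Python sketch, with an example function $f(x, y) = x^2 y + y^3$ of our choosing, that computes each partial derivative as the one-variable derivative of $f$ with the other input held fixed, and collects the two into the gradient. It swaps the one-sided limit for a central difference quotient, a standard and slightly more accurate numerical approximation; the helper names are illustrative.

```python
def f(x, y):
    """Example function (our choice): f(x, y) = x^2 y + y^3."""
    return x ** 2 * y + y ** 3


def partial_x(f, x0, y0, h=1e-5):
    """Freeze y = y0 and differentiate g(x) = f(x, y0) at x0."""
    def g(x):
        return f(x, y0)
    return (g(x0 + h) - g(x0 - h)) / (2 * h)


def partial_y(f, x0, y0, h=1e-5):
    """Freeze x = x0 and differentiate k(y) = f(x0, y) at y0."""
    def k(y):
        return f(x0, y)
    return (k(y0 + h) - k(y0 - h)) / (2 * h)


def gradient(f, x0, y0):
    """The gradient (df/dx, df/dy) at (x0, y0), built from the two partials."""
    return (partial_x(f, x0, y0), partial_y(f, x0, y0))


if __name__ == "__main__":
    # Analytic gradient for comparison: (2xy, x^2 + 3y^2), i.e. (4, 13) at (1, 2).
    print(gradient(f, 1.0, 2.0))
```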

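The story is exactly the same for higher-dimensional functions $f\colon \mathbb{R}^n \to \mathbb{R}$. Write $\mathbf{x} = (x_1, \ldots, x_n)$ for the vector of inputs to our function, so that $f(\mathbf{x}) = f(x_1, \ldots, x_n)$. If $f$ is differentiable at a point $\mathbf{a} = (a_1, \ldots, a_n)$, then we can compute its partial derivatives $\frac{\partial f}{\partial x_i}(\mathbf{a})$ for every $i = 1, \ldots, n$, and these give us the linear approximation

$$f(\mathbf{x}) \approx f(\mathbf{a}) + \frac{\partial f}{\partial x_1}(\mathbf{a})\,(x_1 - a_1) + \cdots + \frac{\partial f}{\partial x_n}(\mathbf{a})\,(x_n - a_n).$$

Using Sigma notation, this equation can be written more briefly as

$$f(\mathbf{x}) \approx f(\mathbf{a}) + \sum_{i=1}^n \frac{\partial f}{\partial x_i}(\mathbf{a})\,(x_i - a_i),$$

and the gradient (“derivative”) of $f$ is the $n$-dimensional vector

$$\nabla f = \left(\frac{\partial f}{\partial x_1}, \ldots, \frac{\partial f}{\partial x_n}\right).$$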

It’s more useful to think of $\nabla f$ as a (row) vector rather than just a list of numbers. Indeed, one advantage is that it makes computing directional derivatives much easier. The partial derivative $\frac{\partial f}{\partial x_i}$ describes the rate of change of $f$ as you vary $x_i$ specifically: in other words, it is the slope of the line that lies tangent to the graph of $f$ and points in the direction of $x_i$. Therefore, we can think of it as a directional derivative of $f$ in the direction $\mathbf{e}_i$ (the $i$th standard basis vector). If you wanted to compute the derivative of $f$ in the direction of an arbitrary* vector $\mathbf{v}$, the precise definition is the familiar-looking limit

$$D_{\mathbf{v}} f(\mathbf{a}) = \lim_{h \to 0} \frac{f(\mathbf{a} + h\mathbf{v}) - f(\mathbf{a})}{h}.$$

However, with the gradient vector of a differentiable function, you can compute the directional derivative more simply as the dot product $D_{\mathbf{v}} f(\mathbf{a}) = \nabla f(\mathbf{a}) \cdot \mathbf{v}$.

*Technical remark. The direction for a directional derivative is conventionally taken to be a unit vector. However, if $\mathbf{v}$ is not a unit vector, then you can think of $D_{\mathbf{v}} f(\mathbf{a})$ as being the rate of change of $f$ when running through $\mathbf{a}$ with velocity $\mathbf{v}$.
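The agreement between the limit definition and the dot product can be checked numerically. This Python sketch uses an example function $f(x, y, z) = xy + \sin z$ (our choice), approximates the limit with a single small $h$, and deliberately takes a non-unit $\mathbf{v}$ in the spirit of the technical remark; all names are illustrative.

```python
import math


def f(p):
    """Example function (our choice): f(x, y, z) = x*y + sin(z)."""
    x, y, z = p
    return x * y + math.sin(z)


def grad_f(p):
    """Its gradient, computed by hand: (y, x, cos z)."""
    x, y, z = p
    return [y, x, math.cos(z)]


def directional_by_limit(f, a, v, h=1e-6):
    """Approximate lim_{h -> 0} (f(a + h v) - f(a)) / h with one small h."""
    shifted = [ai + h * vi for ai, vi in zip(a, v)]
    return (f(shifted) - f(a)) / h


def directional_by_gradient(grad, a, v):
    """The dot product grad f(a) . v."""
    return sum(gi * vi for gi, vi in zip(grad(a), v))


if __name__ == "__main__":
    a = [1.0, 2.0, 0.5]
    v = [0.3, -1.0, 2.0]  # deliberately not a unit vector
    print(directional_by_limit(f, a, v), directional_by_gradient(grad_f, a, v))
```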

A related advantage of taking the gradient to be a (row) vector rather than just a list of numbers is that it allows us to rewrite the linear approximation of $f$ with the dot product as

$$f(\mathbf{a} + \Delta\mathbf{x}) \approx f(\mathbf{a}) + \nabla f(\mathbf{a}) \cdot \Delta\mathbf{x},$$

showing how the multivariable story is very much the same as the one-dimensional story we started with.

Remark. I am aware of the unfortunate notation clash. When I write $\Delta\mathbf{x}$, I am definitely not referring to the Laplacian.

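Moreover, this presentation of the linear approximation suggests that we shouldn’t think of the derivative of a function as a mere number, but rather as a linear transformation. This becomes particularly handy when you try to generalise the derivative further to handle a multivariable vector-valued function $F\colon \mathbb{R}^n \to \mathbb{R}^m$. Now, the total derivative of the function $F$ at a point $\mathbf{a}$ is the unique linear operator $DF_{\mathbf{a}}\colon \mathbb{R}^n \to \mathbb{R}^m$ such that

$$F(\mathbf{a} + \Delta\mathbf{x}) = F(\mathbf{a}) + DF_{\mathbf{a}}(\Delta\mathbf{x}) + o(\|\Delta\mathbf{x}\|).$$

Equivalently (if you like to bring limits into the story), the total derivative is the unique operator such that

$$\lim_{\Delta\mathbf{x} \to \mathbf{0}} \frac{\|F(\mathbf{a} + \Delta\mathbf{x}) - F(\mathbf{a}) - DF_{\mathbf{a}}(\Delta\mathbf{x})\|}{\|\Delta\mathbf{x}\|} = 0.$$

Write $F = (F_1, \ldots, F_m)$ as a (column) vector; then each $F_i\colon \mathbb{R}^n \to \mathbb{R}$ is a multivariable differentiable function. Therefore, we can apply our knowledge of gradients to determine what the total derivative of $F$ looks like row by row. Since $DF_{\mathbf{a}}$ is a linear operator, fix the standard bases for $\mathbb{R}^n$ and $\mathbb{R}^m$; then $DF_{\mathbf{a}}$ admits an $m \times n$ matrix representation

$$J_F(\mathbf{a}) = \begin{pmatrix} \nabla F_1(\mathbf{a}) \\ \vdots \\ \nabla F_m(\mathbf{a}) \end{pmatrix} = \begin{pmatrix} \frac{\partial F_1}{\partial x_1}(\mathbf{a}) & \cdots & \frac{\partial F_1}{\partial x_n}(\mathbf{a}) \\ \vdots & \ddots & \vdots \\ \frac{\partial F_m}{\partial x_1}(\mathbf{a}) & \cdots & \frac{\partial F_m}{\partial x_n}(\mathbf{a}) \end{pmatrix},$$

which is called the Jacobian of $F$.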
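Since row $i$ of the Jacobian is the gradient of $F_i$, the whole matrix can be approximated one input coordinate at a time. The sketch below is illustrative only: the map $F(x, y) = (xy, x^2, \sin y)$ from $\mathbb{R}^2$ to $\mathbb{R}^3$ is our own example, chosen so the result is a $3 \times 2$ matrix, and `jacobian_fd` uses central differences rather than exact derivatives.

```python
import math


def F(p):
    """Example map R^2 -> R^3 (our choice): F(x, y) = (x*y, x^2, sin y)."""
    x, y = p
    return [x * y, x ** 2, math.sin(y)]


def jacobian_fd(F, a, h=1e-6):
    """Approximate the m x n Jacobian of F at a with central differences.

    Entry (i, j) approximates dF_i/dx_j(a), so row i approximates the gradient of F_i.
    """
    n = len(a)
    m = len(F(a))
    J = [[0.0] * n for _ in range(m)]
    for j in range(n):
        plus = list(a)
        minus = list(a)
        plus[j] += h
        minus[j] -= h
        F_plus, F_minus = F(plus), F(minus)
        for i in range(m):
            J[i][j] = (F_plus[i] - F_minus[i]) / (2 * h)
    return J


if __name__ == "__main__":
    # Analytic Jacobian for comparison: [[y, x], [2x, 0], [0, cos y]] at (1.5, 0.5).
    for row in jacobian_fd(F, [1.5, 0.5]):
        print(row)
```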

This more general theory of differential calculus is quite versatile, and allows us to compute tangents and linear approximations for many things. For instance, we can model hills or mountains with a smooth function $h\colon \mathbb{R}^2 \to \mathbb{R}$, where $h(x, y)$ tells you the elevation at coordinates $(x, y)$, and differential calculus can tell us how steep these hills can get, where the peaks and troughs are, etc. For another example, we can model the trajectory of a particle with a smooth function $\gamma\colon \mathbb{R} \to \mathbb{R}^3$, where $\gamma(t)$ describes the coordinates of the particle in 3D space at time $t$, and differential calculus can tell us the velocity of the particle at any point in time.

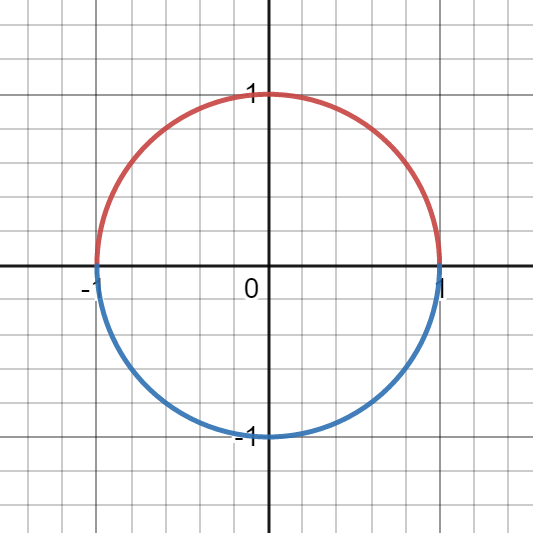

However, we still haven’t quite developed enough mathematics to talk about the surface of the Earth. The issue with smooth geometric shapes, such as ellipses, spheres, tori (i.e., doughnuts), or any kind of blob existing in higher-dimensional space, is that they are typically not graphs of functions. For example, a unit circle centred at the origin has two $y$-values for every $x$ strictly between $-1$ and $1$, so it’s more appropriately plotted as two functions: $y = \sqrt{1 - x^2}$ (red) and $y = -\sqrt{1 - x^2}$ (blue).

This almost seems to solve the issue: you can then use differential calculus on the two functions to compute tangent lines and the whole works. However, this isn’t perfect: even just on the circle presented above, we cannot use the function decomposition to compute the tangent lines where $x = -1$ or $x = 1$, since neither function is actually differentiable at these points. What’s also an issue is that the tangent lines at these points are vertical, and thus cannot be described as functions of $x$.

“The Earth isn’t flat, but it’s close”

The idea is so close to working, though, so let’s modify this kind of deconstruction so that it doesn’t depend on how we draw our geometric shape in Euclidean space ($\mathbb{R}^n$). This leads to the idea of a smooth manifold. Loosely speaking, a smooth $n$-dimensional manifold (also called a smooth $n$-manifold) is a shape $M$ such that for every point $p \in M$, there is a way to smoothly transform a region of $M$ close to $p$ into an open region of $\mathbb{R}^n$.

For example, consider the unit circle again: this should be a smooth 1-manifold, so consider the point at the north pole. The figure below shows how to smoothly transform the upper semicircle into a straight line:

These smooth transformations allow you to define a local coordinate system on your manifold; that is, when you’re near a point $p$ of your $n$-manifold, you can describe your position relative to $p$ with ordinary Euclidean coordinates $(x_1, \ldots, x_n)$. The fact that the surface of the Earth is a smooth 2-manifold is why a map of the city can be navigated using a compass (i.e., east-west and north-south coordinates).

Using the map analogy, you can equivalently describe a smooth $n$-manifold as a space that can be covered with $n$-dimensional “maps” of regions of the manifold, such that the “maps” agree where they overlap on the manifold. The local coordinate systems are then the coordinate systems of these “maps.” Appropriately, the collection of “maps” on a smooth $n$-manifold is called an atlas.

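Formal definition (skippable). A smooth $n$-manifold is a Hausdorff and second-countable topological space $M$ equipped with a smooth atlas, which is an open cover $\{U_\alpha\}_{\alpha \in A}$ of $M$ paired with a family of open embeddings $\varphi_\alpha\colon U_\alpha \to \mathbb{R}^n$ called charts. These charts are required to be smoothly compatible in the sense that the transition maps

$$\varphi_\beta \circ \varphi_\alpha^{-1}\colon \varphi_\alpha(U_\alpha \cap U_\beta) \to \varphi_\beta(U_\alpha \cap U_\beta)$$

are $C^\infty$-functions (meaning they are infinitely differentiable: we can take arbitrarily high-order mixed partial derivatives).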

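Let $M, N$ be smooth manifolds (of possibly differing dimensions), with respective atlases $\{(U_\alpha, \varphi_\alpha)\}$ and $\{(V_\beta, \psi_\beta)\}$. A continuous function $F\colon M \to N$ is a smooth map of manifolds if the maps

$$\psi_\beta \circ F \circ \varphi_\alpha^{-1}\colon \varphi_\alpha\big(U_\alpha \cap F^{-1}(V_\beta)\big) \to \psi_\beta(V_\beta)$$

are $C^\infty$-functions where defined.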
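To make the formal definition concrete, here is one standard smooth atlas on the unit circle, given as a Python sketch (our choice of charts, one of several possible). It uses two stereographic projections, $\varphi_N(x, y) = x/(1 - y)$ from the north pole and $\varphi_S(x, y) = x/(1 + y)$ from the south pole. On the overlap (the circle minus both poles) the transition map works out to $u \mapsto 1/u$ on $\mathbb{R} \setminus \{0\}$, which is $C^\infty$, so the two charts are smoothly compatible. The function names are illustrative.

```python
def phi_N(x, y):
    """Chart on the circle minus the north pole (0, 1): stereographic projection from (0, 1)."""
    return x / (1 - y)


def phi_N_inverse(u):
    """Inverse of phi_N: sends u in R back to a point on the unit circle."""
    d = u * u + 1
    return (2 * u / d, (u * u - 1) / d)


def phi_S(x, y):
    """Chart on the circle minus the south pole (0, -1): stereographic projection from (0, -1)."""
    return x / (1 + y)


def transition(u):
    """The transition map phi_S o phi_N^{-1}, defined on phi_N(overlap) = R minus {0}."""
    return phi_S(*phi_N_inverse(u))


if __name__ == "__main__":
    for u in (0.5, -2.0, 3.0):
        x, y = phi_N_inverse(u)
        # x^2 + y^2 should be 1, and the transition map should agree with 1/u.
        print(u, x * x + y * y, transition(u), 1 / u)
```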

The atlas provides us with a local coordinate system: for a point $p \in M$, we get coordinates through the embedding $\varphi_\alpha\colon U_\alpha \to \mathbb{R}^n$, where $p \in U_\alpha$. More precisely, the coordinates of a nearby point $q$ (i.e., $q \in U_\alpha$) in this coordinate system are just the coordinates of the vector $\varphi_\alpha(q) \in \mathbb{R}^n$. If $F\colon M \to N$ is a map of smooth manifolds, then it induces $C^\infty$ functions on the local coordinates (which reduces its theory to the multivariable calculus described earlier).

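Now, suppose we have a smooth function $F\colon M \to N$ of manifolds. If $\dim M = n$ and $\dim N = m$, then at any point $p \in M$ we can find a local coordinate system $(x_1, \ldots, x_n)$ around $p$, and a local coordinate system $(y_1, \ldots, y_m)$ around $F(p)$, so that (locally) this smooth function looks like a smooth map $\mathbb{R}^n \to \mathbb{R}^m$.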

Therefore, we can reuse the theory already developed for derivatives of multivariable functions to compute the total derivative of $F$ at this point and get a linear operator $\mathbb{R}^n \to \mathbb{R}^m$. Since derivatives are a local property, this should be the correct “derivative” of our smooth function of manifolds, but what does it mean?

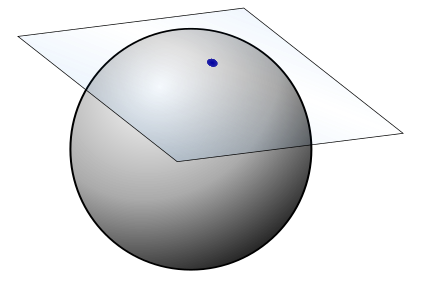

Remember that we first interpreted the total derivative of an ordinary multivariable function at a point as the best linear approximation of the function at the chosen point. This made a lot of sense in Euclidean space because this space is inherently “linear” itself. Now, we’re on a general manifold—there’s no natural “linear” structure on an entire sphere. But, maybe we can find linear structure locally: if we’re going to look at our function closely around a point, then we might as well do the same to our manifold.

The atlas on a manifold is enough to convince tiny conspiracy theorists living there that the world is flat, so zooming in this far, it almost looks like the manifold is a vector space! However, it’s not: a vector space goes off to infinity in every direction, but a local coordinate system is only good for a relatively small piece of our manifold. Consider the 2-manifold that we call Earth. With an atlas of the globe, we have local two-dimensional coordinates that we Earthlings typically refer to as the compass directions*. They’re “local” because they only make sense up to a certain distance, after which the directions stop really making sense. For example, I can say that Saskatchewan is to the east of Alberta, and even that Québec is to the east of Alberta. However, if I “keep going east,” it becomes less apparent: is Germany to the east of Alberta? What if I go so far “east” that I wrap around the Earth and end up in British Columbia… does that make it east of Alberta?

*Technical remark. The compass directions don’t actually make sense at the north and south poles, but they work as local coordinates everywhere else. Just use them as a fairly robust analogy for what local coordinates are: by the hairy ball theorem, you can’t choose smoothly varying, nowhere-vanishing compass directions over an entire even-dimensional sphere.

The problem is that the compass directions aren’t actually supposed to curve with the Earth: they should instead span a flat plane that shoots off the Earth from your reference point:

As you can see, what we really want is to look at the tangent spaces on our manifold! The local coordinates are not directly part of the tangent space since they are glued to the manifold itself and stop making sense if you move too far away from your starting point (because they’re local coordinates). How, then, do we escape the confines of our manifold?

The derivative of the Earth

Well, we do it by running. Imagine you’re running in a straight line on Earth, and then suddenly gravity “turns off.” What happens? You’ll start shooting off in the direction you were running (i.e., in the direction of your velocity vector): this direction sits tangent to the Earth! This is exactly how the tangent space on a manifold is defined: the tangent space at a point $p$ is the space $T_pM$ of all possible velocity vectors that could be realised if you were to run on $M$ and pass through the point $p$.

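What does this mean more mathematically? Let’s say $\gamma(t)$ describes where you are during your run on the manifold $M$ at time $t$. For simplicity, let’s say you are at point $p$ at time $t = 0$ (i.e., $\gamma(0) = p$). Using the local coordinates around $p$, we are allowed to think of $\gamma$ as a smooth function $\gamma(t) = \big(\gamma_1(t), \ldots, \gamma_n(t)\big)$ from a small interval around $t = 0$ into $\mathbb{R}^n$.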

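Just as in the beginning of this story, velocity is given as the derivative of position with respect to time, so the instantaneous velocity vector for $\gamma$ at time $t = 0$ is simply the $n$-dimensional vector

$$\gamma'(0) = \big(\gamma_1'(0), \ldots, \gamma_n'(0)\big).$$

Conversely, every vector in $\mathbb{R}^n$ arises this way, as the velocity of a straight-line path through $p$ in local coordinates. It follows that all tangent spaces of an $n$-dimensional manifold are $n$-dimensional vector spaces. In particular, consider paths $\gamma^{(i)}$ that run in the direction of the local coordinate $x_i$ (in local coordinates, the straight line through $p$ in the direction of the standard basis vector $\mathbf{e}_i$) for each $i = 1, \ldots, n$. Their corresponding velocity vectors then form a basis of $T_pM$.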

Let’s apply this to the 2-manifold we call Earth. The tangent space at your location is spanned by the velocity vectors of running due east and running due north, so this recovers our original realisation of its tangent space discussed earlier.

This technically completes the story of finding tangents on a manifold, but this sort of definition of the tangent space is… clunky. First, given a tangent vector, it’s difficult to recover a path whose instantaneous velocity is given by this vector. Second, this definition seems to depend on our choice of local coordinates. Not every manifold has a natural or obvious local coordinate system at every point, so it would be better if the tangent space could be defined independently of any choice of coordinates.

To find a better definition, we work backwards: let’s suppose $v$ is a tangent vector of our manifold $M$ at a point $p$. We may not know which path $\gamma$ has $v$ as its velocity vector, but we do know that $v$ describes a direction from the point $p$. This enables us to talk about directional derivatives again! But this time, we are speaking of directional derivatives of smooth functions $f\colon M \to \mathbb{R}$, at least locally around $p$. Let’s denote this directional derivative by $D_v f$.

If we think locally, a smooth function $f$ in the local coordinates around $p$ looks like a function $f\colon \mathbb{R}^n \to \mathbb{R}$, and so the directional derivative (as we have seen before) is simply given by the dot product: $D_v f = \nabla f(p) \cdot v$. If $v$ is the velocity vector of some path $\gamma$, then it turns out that the directional derivative as defined is exactly the same as measuring the rate of change of $f$ at time $t = 0$ when you run along the path $\gamma$, meaning that the directional derivative doesn’t depend on the choice of path! In mathematical symbols:

$$D_v f = \nabla f(p) \cdot \gamma'(0) = \frac{d}{dt}\Big|_{t=0} f\big(\gamma(t)\big).$$

(This follows from the chain rule.) Therefore, we can redefine the tangent space as the space of “directional derivatives” $D_v$ that act on smooth functions $f\colon M \to \mathbb{R}$. This is a path-choice-free and coordinate-independent definition of the tangent space! Note that if you pick any local coordinate system near $p$, then this space of directional derivatives is spanned by the derivatives computed in the directions of each coordinate axis. These are exactly the partial derivatives of $f$ with respect to the local coordinates! In other words, if $(x_1, \ldots, x_n)$ is a local coordinate system near $p$, then $T_pM$ has a basis given by the partial derivatives

$$\frac{\partial}{\partial x_1}\Big|_p, \ldots, \frac{\partial}{\partial x_n}\Big|_p.$$
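Path-independence can be checked numerically in local coordinates. In this Python sketch, which is illustrative only, we take the example function $f(x, y) = x^2 + 3xy$ and two hypothetical paths `gamma_1` and `gamma_2` that both pass through $p = (1, 2)$ at $t = 0$ with the same velocity $(1, -1)$, even though they curve differently afterwards. Both rates of change should agree with $\nabla f(p) \cdot v = (8, 3) \cdot (1, -1) = 5$.

```python
def f(x, y):
    """Example function (our choice) written in local coordinates: f = x^2 + 3xy."""
    return x * x + 3 * x * y


def grad_f(x, y):
    """Gradient of f, computed by hand."""
    return (2 * x + 3 * y, 3 * x)


# Two different hypothetical paths through p = (1, 2) at t = 0,
# both with velocity (1, -1) at t = 0.
def gamma_1(t):
    return (1 + t, 2 - t)


def gamma_2(t):
    return (1 + t + t ** 2, 2 - t + 5 * t ** 3)


def rate_along(path, h=1e-6):
    """d/dt f(path(t)) at t = 0, approximated by a central difference."""
    return (f(*path(h)) - f(*path(-h))) / (2 * h)


if __name__ == "__main__":
    p, v = (1.0, 2.0), (1.0, -1.0)
    dot = sum(g * vi for g, vi in zip(grad_f(*p), v))
    print(rate_along(gamma_1), rate_along(gamma_2), dot)
```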

Remark (skippable). The more precise definition of the vectors of the tangent space (that is, the more precise definition of “directional derivatives”) at $p$ on a manifold $M$ is that the vectors are the $p$-centred derivations. Notice that the product rule tells us that

$$D_v(fg) = f(p)\,D_v g + g(p)\,D_v f.$$

In general, then, a $p$-centred derivation is a linear operator $D\colon C^\infty(M) \to \mathbb{R}$ (that is, a linear transformation that sends $C^\infty$ functions on our manifold to numbers) that satisfies Leibniz’s law: $D(fg) = f(p)\,D(g) + g(p)\,D(f)$.

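Leibniz’s law is enough to imply that derivations only care about how functions behave locally (i.e., near $p$). At this scale, we can think of a smooth function $f$ as a function of its local coordinates $(x_1, \ldots, x_n)$; write $p = (p_1, \ldots, p_n)$ in these coordinates. Take the linear approximation of $f$ with a second-order remainder (Taylor’s theorem): then

$$f = f(p) + \sum_{i=1}^n \frac{\partial f}{\partial x_i}(p)\,(x_i - p_i) + \sum_{i,j=1}^n (x_i - p_i)(x_j - p_j)\,h_{ij}$$

for some smooth functions $h_{ij}$. Apply an arbitrary derivation $D$ on $f$. Leibniz’s law causes the constant $f(p)$ and the higher-order terms to vanish under the derivation (for a constant, $D(1) = D(1 \cdot 1) = 2D(1)$ forces $D(1) = 0$; each higher-order term is a product of two functions that vanish at $p$), so we are left with

$$D(f) = \sum_{i=1}^n \frac{\partial f}{\partial x_i}(p)\,D(x_i) = \sum_{i=1}^n D(x_i)\,\frac{\partial}{\partial x_i}\Big|_p f.$$

This shows that all $p$-centred derivations lie in the span of the partial derivatives $\frac{\partial}{\partial x_i}\big|_p$, so generalising from directional derivatives to derivations gives nothing new. This proves that defining the tangent vectors as $p$-centred derivations is equivalent to defining them as velocities of smooth curves running through $p$.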
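Leibniz’s law and the expansion $D(f) = \sum_i D(x_i)\,\frac{\partial f}{\partial x_i}(p)$ can both be sanity-checked for an ordinary directional derivative. In the Python sketch below, the base point `P`, the direction `V`, and the functions `f` and `g` are hypothetical choices, and `D` approximates the derivation with a central difference, so the identities hold only up to small rounding errors.

```python
import math

P = (0.5, -1.0)  # hypothetical base point p, in local coordinates
V = (2.0, 3.0)   # hypothetical tangent direction at p


def D(h, step=1e-6):
    """A p-centred derivation: the directional derivative of h at P along V (central difference)."""
    plus = (P[0] + step * V[0], P[1] + step * V[1])
    minus = (P[0] - step * V[0], P[1] - step * V[1])
    return (h(*plus) - h(*minus)) / (2 * step)


def f(x, y):
    return math.exp(x) * y


def g(x, y):
    return x * x + math.cos(y)


def fg(x, y):
    return f(x, y) * g(x, y)


def x1(x, y):
    return x  # first coordinate function


def x2(x, y):
    return y  # second coordinate function


if __name__ == "__main__":
    # Leibniz's law: D(fg) = f(p) D(g) + g(p) D(f).
    print(D(fg), f(*P) * D(g) + g(*P) * D(f))
    # D(f) = D(x1) * df/dx(p) + D(x2) * df/dy(p), with df/dx = e^x y and df/dy = e^x.
    print(D(f), D(x1) * math.exp(P[0]) * P[1] + D(x2) * math.exp(P[0]))
```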

Let’s remember what we were trying to do: we had a smooth function $F\colon M \to N$ of manifolds, and we wanted to make sense of its derivative at a point $p \in M$. Now that we have established local linear structure on our manifolds using tangent spaces, we can say: the derivative of the function $F$ at a point $p$ is the best linear approximation of the function, mapping between the best linear approximations of $M$ (at $p$) and $N$ (at $F(p)$). More precisely, the derivative at the point is a linear operator between the tangent spaces

$$dF_p\colon T_pM \to T_{F(p)}N.$$

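If you pick a local coordinate system $(x_1, \ldots, x_n)$ of $M$ near $p$ and a local coordinate system $(y_1, \ldots, y_m)$ of $N$ near $F(p)$, then we get bases $\frac{\partial}{\partial x_1}\big|_p, \ldots, \frac{\partial}{\partial x_n}\big|_p$ of $T_pM$ and $\frac{\partial}{\partial y_1}\big|_{F(p)}, \ldots, \frac{\partial}{\partial y_m}\big|_{F(p)}$ of $T_{F(p)}N$, and the matrix associated to $dF_p$ then recovers the Jacobian! If you write out what this means explicitly (with $F_i = y_i \circ F$ the $i$th coordinate of $F$), you get that

$$dF_p\left(\frac{\partial}{\partial x_j}\Big|_p\right) = \sum_{i=1}^m \frac{\partial F_i}{\partial x_j}(p)\,\frac{\partial}{\partial y_i}\Big|_{F(p)}.$$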

While perhaps not the easiest to see in this form, this is really just the chain rule back at it again. However, we don’t want this to be the definition of the total derivative of a smooth function of manifolds at a point: once again, this definition depends on our choice of local coordinates! Now that we have a coordinate-free definition of the tangent space, we should be able to find a coordinate-free definition of the derivative.

The elements of our tangent space correspond to “directional derivatives” that act on smooth functions $f\colon M \to \mathbb{R}$. If we take a smooth function $g\colon N \to \mathbb{R}$, then we can use $F$ to realise it as a smooth function $g \circ F\colon M \to \mathbb{R}$. This allows us to act on it with our directional derivative $D_v$. Since directional derivatives ought to behave like derivatives, our knowledge of the usual chain rule suggests that

$$D_v(g \circ F) = D_{dF_p(v)}\, g.$$

In other words, the derivative of $F$ acts by changing the direction of a directional derivative. Since the directions are what correspond to tangent vectors, this is consistent with what we expect: the derivative of $F$ is a linear transformation of tangent vectors, and this induces an action on directional derivatives by taking a directional derivative pointing in direction $v$ and spitting out the directional derivative pointing in direction $dF_p(v)$.

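In particular, if you choose a cardinal direction $v = \frac{\partial}{\partial x_j}\big|_p$, then the chain rule gives

$$dF_p\left(\frac{\partial}{\partial x_j}\Big|_p\right) g = \frac{\partial (g \circ F)}{\partial x_j}(p) = \sum_{i=1}^m \frac{\partial F_i}{\partial x_j}(p)\,\frac{\partial g}{\partial y_i}\big(F(p)\big),$$

which is exactly the same as what we had before, showing that the coordinate formula for $dF_p$ is just an analogue of the chain rule.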

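Remark (skippable). The above computation uses the fact that the local coordinate function $y_i$ isolates the $i$th coordinate of a function: $y_i \circ F = F_i$, so that $dF_p\left(\frac{\partial}{\partial x_j}\big|_p\right) y_i = \frac{\partial F_i}{\partial x_j}(p)$. To be fully explicit, the derivative of $F$ acts on $p$-centred derivations $D$ by $(dF_p D)(g) = D(g \circ F)$.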
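The identity $D_v(g \circ F) = D_{dF_p(v)}\, g$ can be tested in coordinates. In this Python sketch we pretend that both manifolds are covered by a single chart of $\mathbb{R}^2$ (an assumption made for simplicity), pick example maps $F(x, y) = (x^2 - y, xy)$ and $g(u, w) = \sin u + uw$ of our own, and compute $dF_p(v)$ as the Jacobian applied to $v$; the helper names are illustrative.

```python
import math


def directional(h, point, direction, step=1e-6):
    """Central-difference derivative of h at point along direction."""
    plus = [a + step * d for a, d in zip(point, direction)]
    minus = [a - step * d for a, d in zip(point, direction)]
    return (h(plus) - h(minus)) / (2 * step)


def F(p):
    """Example map (our choice) written in local coordinates: F(x, y) = (x^2 - y, x*y)."""
    x, y = p
    return [x * x - y, x * y]


def jacobian_F(p):
    """Jacobian of F, computed by hand."""
    x, y = p
    return [[2 * x, -1.0], [y, x]]


def g(q):
    """Example function on the target (our choice): g(u, w) = sin(u) + u*w."""
    u, w = q
    return math.sin(u) + u * w


def pushforward(p, v):
    """dF_p(v) in coordinates: the Jacobian of F at p applied to v."""
    return [sum(Jij * vj for Jij, vj in zip(row, v)) for row in jacobian_F(p)]


if __name__ == "__main__":
    p, v = [1.0, 2.0], [0.5, -1.0]
    lhs = directional(lambda q: g(F(q)), p, v)     # D_v(g o F)
    rhs = directional(g, F(p), pushforward(p, v))  # D_{dF_p(v)} g
    print(lhs, rhs)
```

For $p = (1, 2)$ and $v = (0.5, -1)$, the Jacobian is $\begin{pmatrix} 2 & -1 \\ 2 & 1 \end{pmatrix}$, so $dF_p(v) = (2, 0)$, and both sides come out to about $2(\cos(-1) + 2) \approx 5.08$.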

Excellent: after all this work, we have finally formalised how to take derivatives on (and of) Earth!

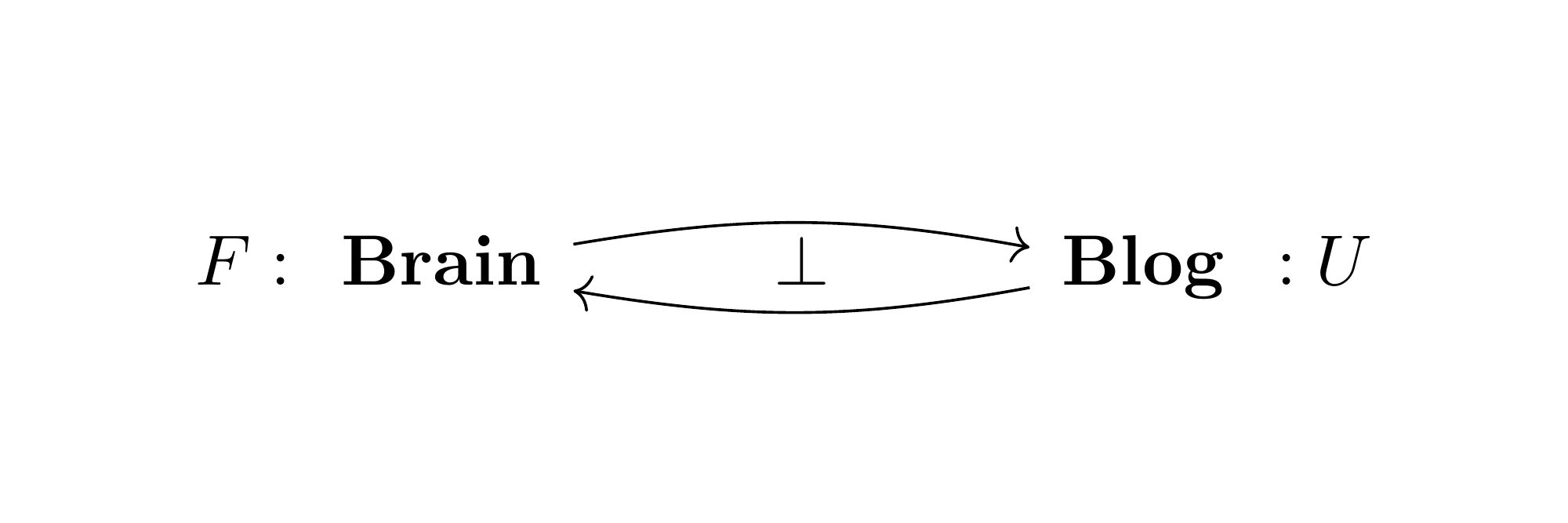

There’s just one thing left that seemed to get lost when we left the world of one-variable calculus: if we had a smooth function $f\colon \mathbb{R} \to \mathbb{R}$, its derivative $f'$ was also a function! We emphasised that it’s better to think of $f'(a)$ as a one-dimensional linear operator, rather than a number, but this number seems to vary smoothly with $a$. In fact, roughly speaking, the same continues to hold in the multivariable setting! How do we recover these phenomena in the setting of manifolds?

Well, the idea is to “bundle up” our tangent spaces and build a manifold of tangent vectors, so that the tangent spaces “vary smoothly” with $p$. This gives, for an $n$-dimensional manifold $M$, a $2n$-dimensional manifold $TM = \bigsqcup_{p \in M} T_pM$. Then, from a smooth function $F\colon M \to N$, we get another smooth function $dF\colon TM \to TN$ (sending $v \in T_pM$ to $dF_p(v) \in T_{F(p)}N$). The smoothness of this function reflects how the derivative $dF_p$ varies smoothly with $p$. A good visual aid for what this bundling achieves is given below:

We get a canonical projection map $\pi\colon TM \to M$ which sends a tangent vector to the point in $M$ at which it is based (i.e., if $v \in T_pM$, then $\pi(v) = p$). Therefore, the individual tangent spaces are realised as the fibres: $T_pM = \pi^{-1}(p)$. The way to make this all formal is with the use of vector bundles:

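Formal definition (skippable). A (real) vector bundle of rank $k$ over a manifold $M$ is a manifold $E$ equipped with

- a smooth projection map $\pi\colon E \to M$ such that, for every $p \in M$, the fibre $E_p = \pi^{-1}(p)$ is a $k$-dimensional (real) vector space, and

- for every $p \in M$, an open neighbourhood $U \ni p$ and a homeomorphism $\Phi\colon \pi^{-1}(U) \to U \times \mathbb{R}^k$ called a local trivialisation. This is required to be compatible with the projection and the vector space fibres: for every $q \in U$, $\Phi(v) \in \{q\} \times \mathbb{R}^k$ for all $v \in E_q$, and the restriction $\Phi|_{E_q}\colon E_q \to \{q\} \times \mathbb{R}^k \cong \mathbb{R}^k$ is a linear isomorphism.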

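The tangent bundle is then the vector bundle $TM$ of rank $n$ whose fibres are the tangent spaces $T_pM$. The local trivialisation is clear: pick an atlas of $M$; then any chart $\varphi\colon U \to \mathbb{R}^n$ in the atlas, with local coordinates $(x_1, \ldots, x_n)$, induces a bijection $\pi^{-1}(U) \to U \times \mathbb{R}^n$ sending $\sum_i v_i \frac{\partial}{\partial x_i}\big|_q$ to $\big(q, (v_1, \ldots, v_n)\big)$, so use them to endow $TM$ with topological structure (gluing the topological structures together using the transition maps between elements of the atlas [MSE]).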
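Once tangent vectors are bundled together, the derivative becomes a single map $dF\colon TM \to TN$, $(p, v) \mapsto (F(p), dF_p(v))$. As an illustrative Python sketch, assuming $M = N = \mathbb{R}^2$ so that the tangent bundle is globally trivial and a tangent vector is just a pair (base point, components), the hypothetical helper `tangent_map` below builds this map from finite-difference Jacobians. Comparing $d(G \circ F)$ with $dG \circ dF$ for two example maps of our choosing checks the chain rule at the level of tangent bundles.

```python
import math


def jacobian(F, p, h=1e-6):
    """Central-difference Jacobian of F at p, returned as a list of rows."""
    columns = []
    for j in range(len(p)):
        plus = list(p)
        minus = list(p)
        plus[j] += h
        minus[j] -= h
        columns.append([(a - b) / (2 * h) for a, b in zip(F(plus), F(minus))])
    return [list(row) for row in zip(*columns)]  # transpose columns into rows


def tangent_map(F):
    """Return dF: TM -> TN on tangent vectors written as (base point, components)."""
    def dF(p, v):
        J = jacobian(F, p)
        return F(p), [sum(Jij * vj for Jij, vj in zip(row, v)) for row in J]
    return dF


def F(p):
    return [p[0] * p[1], p[0] + p[1] ** 2]


def G(q):
    return [math.sin(q[0]), q[0] * q[1]]


def G_after_F(p):
    return G(F(p))


if __name__ == "__main__":
    p, v = [0.7, -0.4], [1.0, 2.0]
    print(tangent_map(G_after_F)(p, v))             # d(G o F) applied to (p, v)
    print(tangent_map(G)(*tangent_map(F)(p, v)))    # dG applied to dF(p, v)
```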

Good job in explaining this. There’s one more definition of the tangent space at $p$: the dual of $\mathfrak{m}_p/\mathfrak{m}_p^2$, where $\mathfrak{m}_p$ is the maximal ideal of (germs of) functions vanishing at $p$. The analogue of this one’s pretty useful in algebraic geometry. Make a post on that subject!


Thanks! I was thinking of doing a post on cotangent spaces whenever I find time, and I’ll definitely talk about $\mathfrak{m}_p/\mathfrak{m}_p^2$ when I get there. 🙂
