You look out the window to see a vast blanket of dark clouds.

"It's going to rain soon," your sister says. "We should probably stay indoors today."

Your brother snorts. "It's not going to rain today!"

You turn to your siblings. "Well, those are the two options..."

Later that day, it snowed.

Over lunch

All integers are either even or odd… right? I mean, those are the only two possibilities: if a number isn’t even, then it must be odd!

Similarly, you would expect that all mathematical statements are either true or false. What else could they be? If a statement isn’t true, then it must be false… right? Well, this is certainly the case in classical logic, and this fact is given a name: the law of the excluded middle (LEM).

Classical logic refers to a “rule book” for how to reason in mathematics, and describes what kinds of deductions are allowed. For instance, the following three arguments follow the same logical rule:

If it rains, I will stay indoors.

It is raining.

Therefore, I will stay indoors.

Where there's smoke, there's fire.

There's smoke.

Therefore, there's fire.

If the function is differentiable, then it is continuous.

The function is differentiable.

Therefore, it is continuous.

The underlying rule here is called modus ponens: if we know that some mathematical statement $P$ is true, and $P \Rightarrow Q$, then we may conclude that the statement $Q$ is true also. The law of the excluded middle is another rule: for any mathematical statement $P$, this rule asserts that “either $P$ is true, or $\neg P$ (the negation of $P$) is true.”
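As a small sketch, the two rules can be written down in Lean, with `P` and `Q` standing for arbitrary statements. Modus ponens is just function application, whereas the law of the excluded middle has to be imported from Lean's classical axioms (`Classical.em`); it is not something the core constructive logic provides.

```lean
-- Modus ponens: a proof of P together with a proof of P → Q yields a proof of Q.
example (P Q : Prop) (hP : P) (hPQ : P → Q) : Q := hPQ hP

-- The law of the excluded middle, taken from Lean's classical axioms.
example (P : Prop) : P ∨ ¬P := Classical.em P
```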

While this “law” seems obvious (in fact, all of the laws of classical logic are “obvious”), there are other logical rule books that do not accept the law of the excluded middle. The most common (but probably not well-known) example is intuitionistic logic. Ironically, it doesn’t seem at all intuitive that the law of the excluded middle might fail: how can neither $P$ nor $\neg P$ be true?!

The reason this is possible is that an intuitionistic logician takes “true” to mean “provably true.” In other words, a logical statement isn’t taken to be true unless you can provide a construction or some other direct demonstration that the statement is true (this is why intuitionistic logic is sometimes called “constructive logic”). In a way, this is a more practical form of truth, because it can actually be tested. The law of the excluded middle may now fail because there is a middle: there are mathematical statements $P$ where neither $P$ nor $\neg P$ can be proven to be true.

We can make more sense of intuitionistic reasoning by drawing parallels to the judicial system. In a court, the accused is presumed innocent until proven guilty. Likewise, a logical statement in an intuitionistic framework is pronounced false until proven true. Classical logic would correspond to whether or not the accused truly is guilty of the crime, which is an unattainable ideal in the real world (since how else, if not with proof, would we know for certain that the accused is guilty of the crime?). In this analogy, it’s easier to believe that the law of the excluded middle can fail: there are many instances of criminals getting away with their crimes due to a lack of evidence.

On the bus

Frankly, I was pretty averse to intuitionistic logic when I was first exposed to it in undergrad: it’s not typically within the bounds of concern for a working mathematician (not even things regarding the validity of the axiom of choice or the like really matter to most mathematicians). To me, the theory of intuitionistic logic was nothing more than the result of taking the theory of classical logic, and removing all theorems that relied on the law of the excluded middle or the axiom of choice. In other words, using intuitionistic logic, from my point of view, was an artificial handicap to doing mathematics.

I vaguely understood “why” the law of the excluded middle was non-constructive. The law of the excluded middle allows you to argue via proof by contradiction: to prove that a result $P$ is true, it is enough to show that $\neg P$ leads to some nonsensical contradiction. For example, suppose I wanted to prove the following:

Proposition 1. There exists a real number $x$ such that $p(x) \neq 0$ for any nonzero polynomial $p$ with integer coefficients.

To get a sense of what Proposition 1 is saying, here are some examples of values for $x$ that don’t work. First, $x$ can’t be an integer: if $x = n$, then $p(x) = 0$ where we take the polynomial $p(t) = t - n$. A bit more generally, $x$ can’t be rational: if $x = a/b$, then $p(x) = 0$ where we take the polynomial $p(t) = bt - a$. Therefore, any $x$ that satisfies Proposition 1 has to be irrational. This is not enough, though. For example, if we take the irrational number $x = \sqrt{2}$, then $p(x) = 0$ for the polynomial $p(t) = t^2 - 2$. So, how do we prove that Proposition 1 is true?

Well, a proof by contradiction would be to suppose Proposition 1 is false. A number that does not satisfy Proposition 1 is called algebraic (therefore, the above paragraph proves that integers, rational numbers, and numbers like $\sqrt{2}$ are all algebraic). So, to prove that non-algebraic numbers exist, begin by supposing that every real number is algebraic. Now, let’s use this assumption to count how many real numbers there are. We have seen in a previous post (on the bus), using Cantor’s diagonal argument, that there are too many real numbers to be written out in an infinite list. On the other hand, the algebraic numbers can actually be listed out. Without going into too much detail, you can do this by:

- listing out all possible nonzero integer coefficient polynomials

- for every such polynomial in the list, replacing it with the list of its (real) roots. This is always a finite list for every polynomial because a polynomial $p$ cannot have more roots (i.e., values $x$ where $p(x) = 0$) than its degree.
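The first step can be sketched in Python. One standard way to list the polynomials (an assumption here, not necessarily the ordering the post has in mind) is to group them by "height", meaning the degree plus the sum of the absolute values of the coefficients. Only finitely many polynomials have a given height, so walking through heights 1, 2, 3, ... eventually reaches every nonzero integer polynomial. The function names are hypothetical.

```python
from itertools import product


def polys_of_height(h):
    """Yield coefficient tuples (a_0, ..., a_n) with a_n != 0 and
    n + |a_0| + ... + |a_n| == h. There are finitely many for each h."""
    for n in range(h):  # degree n; the coefficients must then sum (in abs) to h - n >= 1
        total = h - n
        for coeffs in product(range(-total, total + 1), repeat=n + 1):
            if coeffs[-1] != 0 and sum(abs(c) for c in coeffs) == total:
                yield coeffs


def enumerate_polys(max_height):
    """List every nonzero integer polynomial of height at most max_height."""
    for h in range(1, max_height + 1):
        yield from polys_of_height(h)


print(list(enumerate_polys(2)))
# [(-1,), (1,), (-2,), (2,), (0, -1), (0, 1)]
```

Here `(0, 1)` stands for the polynomial $t$ and `(-2, 0, 1)` (which appears at height 5) stands for $t^2 - 2$. Replacing each entry by its finitely many real roots then gives a list that contains every algebraic number, possibly with repeats.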

Therefore, since we can write out every algebraic number in an infinite list, but we cannot do the same for all real numbers, it is impossible for all real numbers to be algebraic. In conclusion, Proposition 1 is true!

In more detail (if you care). Recall that $\aleph_0$ denotes countable infinity, the infinity that can be listed out. For any finite number $n \geq 1$, the number of degree-$n$ polynomials with integer coefficients can be determined as follows. Such a polynomial has the form $a_n x^n + \dots + a_1 x + a_0$, and we have $\aleph_0$ many choices for every $a_i$. Therefore, we have at most $\aleph_0^{n+1}$ possible polynomials. By that previous post (on the bus), this is equal to $\aleph_0$. Now, any such polynomial can only have at most $n$ real roots, so this means that there are at most $n \cdot \aleph_0$ real roots of polynomials of degree $n$ with integer coefficients. Again, this works out to be equal to $\aleph_0$.

To count how many algebraic numbers there are in total, we can count the number of real roots of polynomials of degree $n$ with integer coefficients for every $n$. This totals to at most $\aleph_0 \cdot \aleph_0 = \aleph_0$ algebraic numbers. In other words, there are countably many algebraic numbers, and therefore they can be written out in an infinite list.

With the above (relatively short) proof, you get that there must exist non-algebraic real numbers. In fact, it proves that almost every real number is not algebraic! However, it doesn’t give you an example of a non-algebraic number at all, despite how “common” they are in the real number line. Since the proof doesn’t explain how you may “construct” a non-algebraic number, this line of reasoning is not intuitionistic / constructive.

A more constructive proof would require providing an explicit example of a non-algebraic number (along with a proof that it is non-algebraic). You might be able to guess some examples: $\pi$ is non-algebraic, for instance. However, how would you prove that $\pi$ is non-algebraic? You have to rule out one candidate polynomial after another, including those whose value at $\pi$ is only roughly zero. For any nonzero integer polynomial $p$, it must be that $p(\pi) \neq 0$. This ends up being significantly harder to prove than the mere existence proof given above![cf] However, it comes with the benefit that we have an actual example of a non-algebraic number, rather than leaving us with nothing but knowledge that these things are somehow in abundance.

Most people probably believe that all mathematical propositions have definitive truth values (i.e., they’re either true or false) and therefore that classical logic is a perfectly reasonable framework to do mathematics. Unfortunately, this belief becomes more questionable the deeper you look. First, as the above example might suggest, there are instances where certain mathematical objects are proven to exist (even in great abundance), but we still lack any explicit examples. One example[cf] is the following: it is known that almost every real number $x > 1$ satisfies that the fractional parts (i.e., the decimal parts) of the numbers $x, x^2, x^3, \ldots$ are distributed evenly throughout the interval $[0, 1)$, and yet we don’t have a single known (proven) example of such a number! To realise how absurd this is, “almost every” in this case implies that if you were to uniformly randomly choose a real number $x$ between 1 and 2, you have a 100% chance of choosing an $x$ satisfying this property… and yet we don’t have a single example of one. How can something really exist in that kind of abundance and still be impossible to find?
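To get a feel for the property, here is a small Python sketch that tallies where the fractional parts of the first few powers of a number land. It uses $3/2$ purely as an illustration: as far as I know, whether the powers of $3/2$ are evenly distributed is itself an open question, so the output is an empirical look and proves nothing. The function name and parameters are hypothetical, and exact rational arithmetic is used because floating point would quickly lose the fractional digits of large powers.

```python
from fractions import Fraction


def fractional_part_histogram(x, n_terms, n_bins):
    """Count how many of frac(x), frac(x^2), ..., frac(x^n_terms) fall into
    each of n_bins equal-width subintervals of [0, 1)."""
    counts = [0] * n_bins
    power = Fraction(1)
    for _ in range(n_terms):
        power *= x
        # Exact fractional part: subtract the integer part (floor) of the power.
        frac = power - (power.numerator // power.denominator)
        counts[int(frac * n_bins)] += 1
    return counts


# Illustrative run: 1000 powers of 3/2, sorted into 10 bins.
counts = fractional_part_histogram(Fraction(3, 2), n_terms=1000, n_bins=10)
print(counts)
```

If the fractional parts were evenly distributed, each of the 10 bins would hold roughly a tenth of the terms as `n_terms` grows.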

It gets even worse if you start thinking about Gödel’s Incompleteness Theorems: any consistent axiomatic foundation that is strong enough to encompass basic arithmetic will have propositions that can be neither proven nor disproven. This includes the most common axiomatic foundation of mathematics (ZFC set theory). A famous example of such a proposition (as mentioned in my post about infinity) is the Continuum Hypothesis: ZFC is capable of neither proving nor disproving the following claim.

Continuum Hypothesis. If a set of real numbers cannot be enumerated in an infinite list, then it can be put in 1-to-1 correspondence with all real numbers.

This means that there are models of ZFC (i.e., foundations where the axioms of ZFC are true) where the Continuum Hypothesis is true, and also models of ZFC where the Continuum Hypothesis is false. With this in mind… how can the Continuum Hypothesis have a definitive truth value? Ultimately, the study of mathematics is less about what is true or false, but more about what is provably true or provably false. Therefore, despite how mathematicians will usually function with a classical logical mindset, the Continuum Hypothesis shows that this classical logic must at the very least be taken with a grain of salt.

In the lounge

A place where classical logic may not be applicable (that I’m curious about, but not at all familiar with) is within an elementary topos. A topos is basically a category that carries enough structure that its “internal set theory” (since all categories are basically set theories) is sufficiently similar to usual set theory in mathematics. To help myself understand this a bit better, I will be relying primarily on [Str03, Ch. 13] and [MM94, Ch. VI.5–7].

The relevant logics for the set theories are higher order and typed (they’re also intuitionistic). Being higher order allows us to reason with predicates using other predicates, and being typed allows us to control the domain of discourse when quantifying statements.

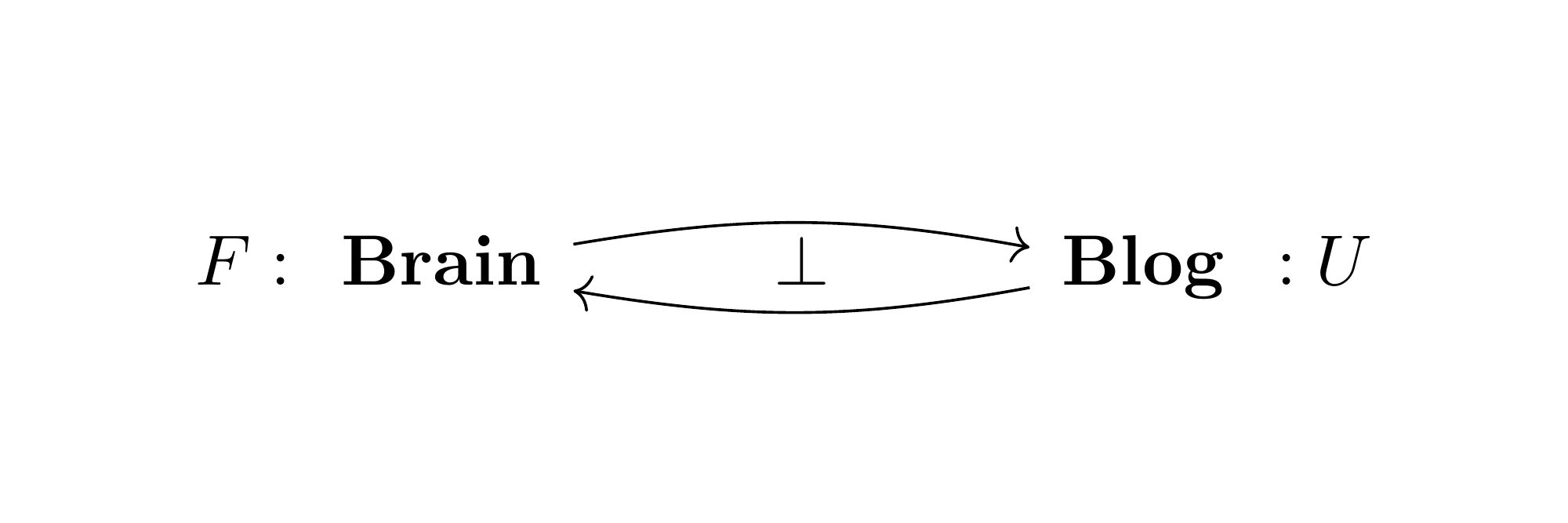

First, recall that an elementary topos $\mathcal{E}$ is a finitely complete, cartesian closed category such that the subobjects functor $\mathrm{Sub} : \mathcal{E}^{\mathrm{op}} \to \mathbf{Set}$ (where subobjects are taken modulo isomorphism) is representable by some object $\Omega$ appropriately called the subobject classifier. Intuitively, $\Omega$ is the “object of truth values” in $\mathcal{E}$, and the natural isomorphism $\mathrm{Sub}(X) \cong \mathcal{E}(X, \Omega)$ tells us that we can identify “predicates” on $X$ (i.e., morphisms $\varphi : X \to \Omega$) with subobjects of $X$, where the subobject corresponding to $\varphi$ should be thought of as $\{x \in X \mid \varphi(x)\}$. Conversely, the predicate corresponding to a subobject $A \subseteq X$ should be thought of as the “characteristic map” $\chi_A : X \to \Omega$.

We have a well-defined subobjects functor because $\mathcal{E}$ is finitely complete, as the functor sends morphisms to their pullback action (and pullbacks preserve monomorphisms). Moreover, the presence of pullbacks in $\mathcal{E}$ already makes $\mathrm{Sub}(X)$ a meet semilattice (with the order relation being given by subobject inclusion, the top element being $X$ itself, and the meet operation being given on $A, B \subseteq X$ by $A \cap B := A \times_X B$). In fact, since limits commute with limits, this means that the subobjects functor factors through the category of meet semilattices, meaning that $\Omega$ is an internal meet semilattice in $\mathcal{E}$ as well, equipped with a top element $\top : 1 \to \Omega$, a conjunction $\wedge : \Omega \times \Omega \to \Omega$, and a partial order $\leq$ (which is an “external implication” relation). It’s an external relation because the truth value of $\varphi \leq \psi$ is given by a truth value of the ambient (external) logic, and is not given by a “truth value” in $\Omega$. An internal implication would have to be a morphism $\Omega \times \Omega \to \Omega$.

More generally, the internal hom structure implies that a similar internal meet semilattice structure is given to all power objects $\Omega^X$ since they internalise $\mathrm{Sub}(X)$. In a general power object $\Omega^X$, denote the external implication by $\leq_X$, the top element by $\top_X$, and the conjunction by $\wedge_X$. If the context is clear, the subscripts may be dropped.

For example, we can internalise “equality” in a fairly straightforward manner: to define an internal equality relation $=_X : X \times X \to \Omega$, just take the morphism corresponding to the diagonal subobject $\Delta : X \to X \times X$. Given global elements $a, b : 1 \to X$, the internal predicate $(a =_X b) : 1 \to \Omega$ is only “true” if it coincides with $\top$, and we can see that this happens if and only if $a = b$ externally (the story is similar for generalised elements $a, b : U \to X$, but validity is checked by seeing if it factors through $\top$; i.e., it coincides with the composite $U \to 1 \xrightarrow{\top} \Omega$). However, if $a \neq b$, the truth value of $a =_X b$ can be a plethora of things other than merely “false.”

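Applying the above internalisation to the subobject classifier, we obtain an internalisation of “equivalence” $\Leftrightarrow : \Omega \times \Omega \to \Omega$. More generally, applying this to a power object $\Omega^X$, we get an internalisation of “equivalence of predicates” on $X$ given by $=_{\Omega^X} : \Omega^X \times \Omega^X \to \Omega$.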

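It turns out we can internalise implication as well: define the implication relation $\Rightarrow_X$ for predicates on $X$ as the composite

$$\Omega^X \times \Omega^X \xrightarrow{(\wedge_X,\, \pi_1)} \Omega^X \times \Omega^X \xrightarrow{=_{\Omega^X}} \Omega.$$

This internalises the statement that $\varphi \leq_X \psi$ says the same thing as $\varphi \wedge_X \psi = \varphi$. This is an internalisation of the external implication because for any predicates $\varphi, \psi$ on $X$, we get that $\varphi \leq_X \psi$ (i.e., $\varphi \wedge_X \psi = \varphi$) if and only if $\varphi \Rightarrow_X \psi$ factors through $\top$. Moreover, on $\Omega$ itself (the case $X = 1$) this defines a Heyting implication: $r \leq (p \Rightarrow q)$ if and only if $r \wedge p \leq q$.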

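We can also internalise universal quantification $\forall_X : \Omega^X \to \Omega$, which is the higher order predicate corresponding to the subobject $\top_X : 1 \to \Omega^X$ adjunct to the true predicate $X \to 1 \xrightarrow{\top} \Omega$. Given a predicate $\varphi : X \to \Omega$, we get that $\varphi(a)$ factors through $\top$ for all generalised elements $a : U \to X$ if and only if $\forall_X(\varphi) = \top$ (the story is the same for generalised internal predicates $\varphi : V \to \Omega^X$ with transpose $\hat{\varphi} : V \times X \to \Omega$, where $\forall_X(\varphi)$ factors through $\top$ if and only if $\hat{\varphi}(a)$ factors through $\top$ for all generalised elements $a : U \to V \times X$). This allows us to also write $\forall_X(\varphi)$ as $\forall x \in X.\, \varphi(x)$ or $\forall x.\, \varphi(x)$.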

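This gives us a lot of the basic elements of the Mitchell-Bénabou language of $\mathcal{E}$. To handle the remaining connectives, we use the following trick: $\Omega$ must have some notion of “false” (otherwise it would fail to satisfy its universal property), so define the negative connectives by scanning $\Omega$ for this truth value and taking the infimum outcome.

Explicitly, define “false” to be $\bot := \forall p \in \Omega.\, p$, and then define negation $\neg : \Omega \to \Omega$ via $\neg p := (p \Rightarrow \bot)$. Similarly, we can define disjunction $\vee : \Omega \times \Omega \to \Omega$ as $p \vee q := \forall r \in \Omega.\, ((p \Rightarrow r) \wedge (q \Rightarrow r)) \Rightarrow r$, and similarly existential quantification $\exists_X : \Omega^X \to \Omega$ as $\exists_X(\varphi) := \forall r \in \Omega.\, (\forall x \in X.\, (\varphi(x) \Rightarrow r)) \Rightarrow r$. These are basically given by de Morgan’s Laws.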
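The idea of defining "false", disjunction and existence by quantifying over all truth values can be sanity-checked in Lean, whose `Prop` also allows quantification over every proposition. This is only an analogy with the subobject classifier, and the exact shape of the encodings below (curried implications rather than a conjunction) is my assumption; the point is that each encoding is provably equivalent to the usual connective without any classical axiom.

```lean
-- "False" as the infimum of all truth values.
example : False ↔ ∀ p : Prop, p :=
  ⟨fun h _ => h.elim, fun h => h False⟩

-- Disjunction encoded by quantifying over every possible conclusion r.
example (p q : Prop) : (p ∨ q) ↔ ∀ r : Prop, (p → r) → (q → r) → r :=
  ⟨fun h _ hp hq => h.elim hp hq, fun h => h (p ∨ q) Or.inl Or.inr⟩

-- Existential quantification encoded the same way.
example (α : Type) (φ : α → Prop) :
    (∃ x, φ x) ↔ ∀ r : Prop, (∀ x, φ x → r) → r :=
  ⟨fun h _ k => h.elim k, fun h => h (∃ x, φ x) (fun x hx => ⟨x, hx⟩)⟩
```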

The idea now is that the objects of $\mathcal{E}$ are types, and provide context in which predicates (i.e., morphisms into the subobject classifier) can make sense. Given predicates $\varphi, \psi : X \to \Omega$, we can read $\varphi \leq_X \psi$ as a sequent in the context $X$ (which is really just an object of $\mathcal{E}$).

The benefit of the Mitchell-Bénabou language is that it can really help us think about objects of a topos more naïvely set-theoretically. For example, a morphism $f : X \to Y$ is a monomorphism iff $\forall x, x' \in X.\, f(x) = f(x') \Rightarrow x = x'$, and is an epimorphism iff $\forall y \in Y.\, \exists x \in X.\, f(x) = y$. The latter contrasts with the usual co-elementary way of generalising surjections. Similarly, we can define a natural numbers object simply as an object $N$ and morphisms $0 : 1 \to N$ and $s : N \to N$ satisfying the Peano axioms:
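One standard way to state these axioms is sketched below in Lean, as a stand-in for the internal language. The structure name `PeanoSystem` and its field names are hypothetical, and the notation may differ from the formulation intended here. A predicate `P : N → Prop` plays the role of an element of the power object, and the application `P n` plays the role of membership of `n` in `P`.

```lean
-- Hypothetical packaging of the Peano axioms for a type N with zero and successor.
structure PeanoSystem where
  N : Type
  zero : N
  succ : N → N
  -- zero is not a successor
  succ_ne_zero : ∀ n : N, succ n ≠ zero
  -- successor is injective
  succ_inj : ∀ m n : N, succ m = succ n → m = n
  -- induction, quantifying over all predicates on N
  induction : ∀ P : N → Prop, P zero → (∀ n : N, P n → P (succ n)) → ∀ n : N, P n
```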

(Note that membership is just given by the evaluation counit of the power object.) This language also allows us to define a real numbers object in a topos that has a natural numbers object! Indeed, you just have to follow the usual sequence of steps: $N \rightsquigarrow Z \rightsquigarrow Q$, and then take Dedekind cuts. For example, starting with the category of sheaves over a topological space $X$, the natural numbers (resp. integers, rational numbers) object is given by the constant sheaf on the set of natural numbers (resp. integers, rational numbers), and the real numbers object is given by the sheaf of real-valued continuous functions on $X$.

Now, the appearance of intuitionistic logic comes when you think about the Law of the Excluded Middle: $\forall p \in \Omega.\, p \vee \neg p$. The Law of the Excluded Middle holds if and only if our topos $\mathcal{E}$ is Boolean, meaning that $\mathrm{Sub}(X)$ is a Boolean algebra for every object $X$ (i.e., every subobject has a well-defined complement). Indeed, these are equivalent to stating that $\Omega$ (and thus any power object) is an internal Boolean algebra, in which case the complement is given by negation. As you might then expect, not all topoi are Boolean.

To see this, note that $\Omega$ internalises the Heyting algebra $\mathrm{Sub}(1)$ of subterminal objects in $\mathcal{E}$ (put another way, the subterminal objects correspond to truth values of $\mathcal{E}$). This has to be a Boolean algebra (i.e., negation has to serve as a complement) if $\mathcal{E}$ is Boolean. For instance, $\mathrm{Sub}(1) \cong \{0, 1\}$ in $\mathbf{Set}$ is a Boolean algebra.

If we take an over topos $\mathcal{E}/I$ (where the subobject classifier is explicitly given by the projection $\Omega \times I \to I$), the terminal object is the identity $\mathrm{id}_I : I \to I$, and so subterminal objects are precisely the subobjects of $I$. For instance, if we take the topos of $I$-graded sets $\mathbf{Set}^I \simeq \mathbf{Set}/I$ (where this equivalence comes from taking fibres, or more abstractly by mumbling about Grothendieck), then we get $2^{|I|}$-many truth values. However, this is still a Boolean topos since $\mathcal{P}(I)$ is a Boolean algebra, so this shows that Boolean topoi need not have only two truth values.

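Taking a general Grothendieck topos $\mathrm{Sh}(\mathcal{C}, J)$, the subterminal sheaves correspond to subsets $S$ of objects of $\mathcal{C}$ such that

- $D \in S$ whenever $C \in S$ and there exists a morphism $D \to C$.

- conversely, when given a covering sieve $R \in J(C)$ such that $D \in S$ for all $f : D \to C$ in $R$, then also $C \in S$.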

In other words, the truth values of $\mathrm{Sh}(\mathcal{C}, J)$ correspond to “local regions” in $\mathcal{C}$, which can be thought of as maximal regions where a proposition can hold over $\mathcal{C}$ locally. For instance, if we take a topos of sheaves over a topological space $X$, then this reduces to the lattice $\mathcal{O}(X)$ of open subsets of $X$. Here, we can see that Grothendieck topoi are generally not Boolean: negation is given by taking the interior of the set-theoretic complement of an open subset (and disjunction is given by union), from which we can see that $\mathcal{O}(X)$ fails to be Boolean if $X$ has open subsets that are not closed. The fact that the internal logic may not be Boolean (and therefore may not satisfy an appropriate internal axiom of choice) is why we use the more choice-free construction of real numbers (as opposed to the Cauchy completion, as its elements are equivalence classes of sequences and would require choice to be recovered from the Dedekind cuts, whereas the converse direction is choice-free). Indeed, the Cauchy real numbers object in a category of sheaves over a topological space $X$ is (I think[cf]) given by the sheaf of locally constant real-valued continuous functions on $X$ (and is thus strictly smaller).
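The failure of excluded middle in a lattice of open sets can be seen in the smallest possible case. The sketch below uses the Sierpiński space (my choice of example, not one from the text above): two points, with open sets $\emptyset$, $\{1\}$ and $\{0, 1\}$. The function names are hypothetical.

```python
X = frozenset({0, 1})
# Sierpinski space (an illustrative choice): the open sets are {}, {1} and {0, 1}.
OPENS = [frozenset(), frozenset({1}), X]


def interior(subset):
    """Largest open set contained in `subset` (the union of all opens inside it)."""
    result = frozenset()
    for u in OPENS:
        if u <= subset:
            result |= u
    return result


def implies(u, v):
    """Heyting implication: the largest open w such that (w & u) is inside v."""
    return interior((X - u) | v)


def neg(u):
    """Negation u => empty set, i.e. the interior of the complement of u."""
    return implies(u, frozenset())


U = frozenset({1})
print(neg(U))            # frozenset()
print(U | neg(U) == X)   # False: "U or not U" is not the top element
print(neg(neg(U)) == U)  # False: double negation does not return U
```

The open set $\{1\}$ is not closed, so its negation is empty and the union of the two falls short of the whole space, exactly as described above.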

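Lastly, I want to say a bit about Kripke-Joyal semantics, which are related to forcing (tying things back to provability theory). The forcing relation, denoted $U \Vdash \varphi(\alpha)$ where $\varphi : X \to \Omega$ is a predicate and $\alpha : U \to X$ is a generalised element of $X$, holds precisely if $\alpha$ factors through $\{x \in X \mid \varphi(x)\}$ (equivalently, if $\varphi\alpha$ factors through $\top$). We then immediately have two basic properties:

- monotonicity: if $U \Vdash \varphi(\alpha)$, then $V \Vdash \varphi(\alpha p)$ for any $p : V \to U$

- local character: conversely, if $p : V \twoheadrightarrow U$ is an epimorphism and $V \Vdash \varphi(\alpha p)$, then $U \Vdash \varphi(\alpha)$.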

Intuitively, these make the forcing relation appear to assess truth as a local property: the first suggests that truth continues to hold when taking a subset, and the second suggests that truth is preserved by coverings. Moreover, we get the following.

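Theorem 2. Let $\varphi, \psi$ be predicates on $X$, and $\alpha : U \to X$ a generalised element of $X$. Also, let $\theta : X \times Y \to \Omega$ be a bivariate predicate. Then,

- $U \Vdash \varphi(\alpha) \wedge \psi(\alpha)$ iff $U \Vdash \varphi(\alpha)$ and $U \Vdash \psi(\alpha)$

- $U \Vdash \varphi(\alpha) \vee \psi(\alpha)$ iff there exist jointly epic $p : V \to U$ and $q : W \to U$ (i.e., $p + q : V + W \twoheadrightarrow U$) where $V \Vdash \varphi(\alpha p)$ and $W \Vdash \psi(\alpha q)$

- $U \Vdash \varphi(\alpha) \Rightarrow \psi(\alpha)$ iff $V \Vdash \varphi(\alpha p)$ implies $V \Vdash \psi(\alpha p)$ for any $p : V \to U$

- $U \Vdash \neg\varphi(\alpha)$ iff the only time $V \Vdash \varphi(\alpha p)$ for some $p : V \to U$ is when $V \cong 0$.

- $U \Vdash \exists y.\, \theta(\alpha, y)$ iff $V \Vdash \theta(\alpha p, \beta)$ for some epimorphism $p : V \twoheadrightarrow U$ and some generalised element $\beta : V \to Y$

- $U \Vdash \forall y.\, \theta(\alpha, y)$ iff $V \Vdash \theta(\alpha p, \beta)$ for every $p : V \to U$ and every generalised element $\beta : V \to Y$. This is moreover iff $U \times Y \Vdash \theta(\alpha \pi_1, \pi_2)$.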

Notice that disjunction (and existence) highlights the local / intuitionistic nature of the internal logic. In fact, if we restrict our attention to a Grothendieck topos $\mathrm{Sh}(\mathcal{C}, J)$, this “locality” intuition becomes more explicit:

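Theorem 3. Suppose $X, Y$ are sheaves (with predicates $\varphi, \psi : X \to \Omega$ and $\theta : X \times Y \to \Omega$) and $\alpha \in X(C)$ for some $C \in \mathcal{C}$. Then,

- $C \Vdash \varphi(\alpha) \wedge \psi(\alpha)$ iff $C \Vdash \varphi(\alpha)$ and $C \Vdash \psi(\alpha)$, as before

- $C \Vdash \varphi(\alpha) \vee \psi(\alpha)$ iff we can find a covering $\{f_i : C_i \to C\}$ such that $C_i \Vdash \varphi(\alpha f_i)$ or $C_i \Vdash \psi(\alpha f_i)$ for each $i$

- $C \Vdash \varphi(\alpha) \Rightarrow \psi(\alpha)$ iff $D \Vdash \varphi(\alpha f)$ implies $D \Vdash \psi(\alpha f)$ for every $f : D \to C$ (in $\mathcal{C}$!)

- $C \Vdash \neg\varphi(\alpha)$ iff the only time $D \Vdash \varphi(\alpha f)$ for some $f : D \to C$ (in $\mathcal{C}$) is when the empty family covers $D$

- $C \Vdash \exists y.\, \theta(\alpha, y)$ iff there exist a covering $\{f_i : C_i \to C\}$ and $\beta_i \in Y(C_i)$ such that $C_i \Vdash \theta(\alpha f_i, \beta_i)$ for every $i$

- $C \Vdash \forall y.\, \theta(\alpha, y)$ iff $D \Vdash \theta(\alpha f, \beta)$ for all $f : D \to C$ (in $\mathcal{C}$) and all $\beta \in Y(D)$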
