It really is something, especially since in the very homepage, I refer to this blog as the category of algebras for the “free thinking” monad… I have an ulterior motive for writing this post, so this isn’t going to be the best introduction to monads you’ve ever seen. If I find time, maybe I’ll give monads a proper post.

So anyway, what is a monad? There are many equivalent and terse ways of defining them floating around, for example:

Definition 1. A monad on a category is a monoid in the category of endofunctors over

.

This definition is a convenient way of remembering the axioms of a monad: they’re the same as a monoid. To be more explicit: a monad is a functor endowed with a unit

and a multiplication

such that the unit acts by identity under multiplication, and multiplication is associative.

Even though the axioms themselves aren’t too challenging, monads can be an incredibly difficult concept to digest, since it really puts your ability to think abstractly on the spot. Sure, a monoid is easy enough (a set with an associative, unital multiplication), but for a monad, you replace the set with a functor! What’s a good way to think about monads then? Well, don’t quote me, but for the purposes of this post at least, this should be sufficient:

If is an object (viewed as a set of things) of the category

and

is a monad, then

can be thought of as the object of certain “constructions” performed formally (formal = no “real world” considerations; that is, done freely) on

. The unit map

reflects how everything “real” in

can be viewed as “formal,” and the multiplication map

reflects how anything that is “formally formal” is just “formal.”

To see what I mean, consider a few examples:

- The Kleene star monad

over sets

- This takes a set

and gives the collection of all finite lists of elements of

; that is,

- The monad unit realises every element

as the one-element list

- The monad multiplication takes a list of lists and concatenates them all as a single list: for example

.

- This takes a set

- The “free

-module” monad

over sets

- This takes a set

and gives the collection of all formal

-linear combinations of elements of

; that is,

(modulo obvious relations like

)

- The monad unit realises every element

as the trivial formal linear combination

.

- The monad multiplication observes that a formal linear combination of formal linear combinations can be made into just a one-step formal linear combination: for example

.

- This takes a set

- The group

algebra monad

over groups

- This takes a group

and gives the collection of all formal

-linear combinations of elements of

as above, but with multiplication induced by the multiplication of group elements. More precisely,

is the module

where the multiplication is given by

.

- The monad unit and multiplication are as before.

- This takes a group

- The maybe monad

on sets (or types)

- This takes a type and returns a type that is extended to include an “error” instance (at least, this is the interpretation). This means that a function whose output type is

either produces an element of

, or throws an error. Mathematically, the monad is very simple: it is

(where the new element is the “error” element).

- The monad unit just observes that the type is extended: everything in the original type is still there.

- The monad multiplication merges two “error” elements to be the same thing; that is, it is the projection

sending both

.

- This takes a type and returns a type that is extended to include an “error” instance (at least, this is the interpretation). This means that a function whose output type is

- For a weird example (i.e., stretches the interpretation I gave): the abelianisation monad

over groups

- This takes a group

and gives the abelianisation

, which is the result of forcing all elements of

to commute.

- The monad unit is the canonical projection

, sending every group element to itself in the abelianisation.

- The monad multiplication in this case is actually an isomorphism

. It says that nothing changes if you abelianise a group that is already abelian.

- This takes a group

Notice that “formal” and “free” mean very similar things (at least mathematically). This is no mere coincidence. In some sense, any pair of adjoint functors can be thought of as a free-forgetful adjunction (see, for instance, an older post), and…

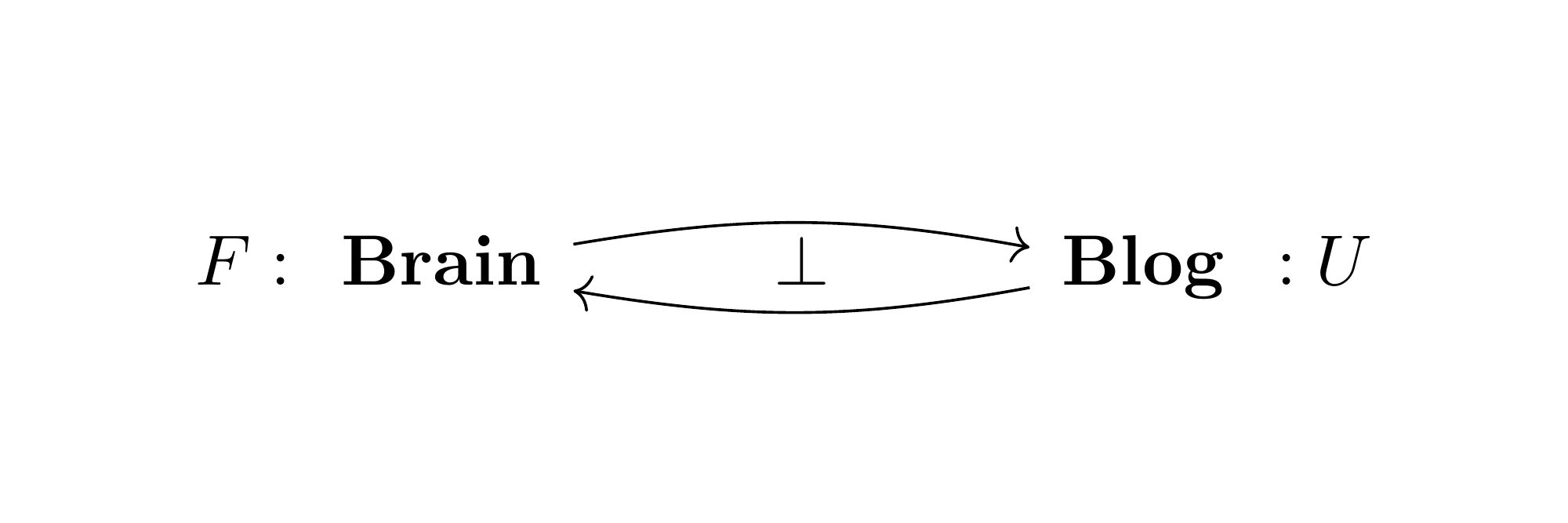

Observation 2. Given an adjoint pair of functors , the composite

canonically defines a monad on

. The unit is precisely the adjunction unit

, and the multiplication is induced by the counit:

.

In fact, all of the above examples come from an adjoint pair:

- The Kleene star comes from the forgetful functor

, with left adjoint the “free monoid” functor. Hence, the Kleene star is sometimes referred to as the “free monoid” monad.

- The “free

-module” monad comes, unsurprisingly, from the forgetful functor

, being the left adjoint.

- The group

-algebra construction comes from the “group of units” functor

, being the left adjoint.

- The maybe monad comes as left adjoint to the forgetful functor

from pointed sets.

- The abelianisation monad comes as left adjoint to the forgetful functor

.

This, too, is no coincidence. Every monad comes from an adjoint pair! In fact, although adjoint functors can be viewed as free-forgetful pairs in a loose sense, monads can be viewed as monads of free-forgetful adjunctions in a much more precise way.

Algebras of a monad

Let be a monad over a category

. If we want to realise this as the “free/formal structures” monad coming from an adjunction, then we need a category whose objects are those of

equipped with the structure that

is allegedly building formally. This is achieved by the

–algebras:

Definition 3. An algebra over is an object

equipped with an “action”

such that the unit acts by identity (meaning

is the identity), and the action of a multiplication coincides with iterated action (meaning

is the same as

).

Intuitively, the action in a -algebra encodes a way of realising the “formal” constructions of

as truly living in

. The category of all

-algebras (and action-preserving morphisms) is the Eilenberg-Moore category

.

- An algebra

of the Kleene star monad is precisely a monoid, with the action defining the (multiary) multiplication. In particular, the binary product is given by

, and the monoid unit is given by the empty list

. The monad multiplication ensures that this product is associative and unital, while the unit ensures that the unary product doesn’t do anything:

. Therefore, the Eilenberg-Moore category of the Kleene star is the category of monoids

.

- An algebra of the free

-module monad is exactly an

-module, since it realises a formal linear combination as a true element. Therefore, the Eilenberg-Moore category is the category of modules

.

- An algebra of the group algebra construction is just a group algebra.

.

- An algebra of the maybe monad is a pointed set. The action absorbs the “error” state into the distinguished element.

.

- An algebra of the abelianisation is, surprisingly (to me), an abelian group. Indeed, the abelianisation unit (i.e., projection) is already surjective, and the action of an algebra has to be a retraction of the unit. This forces the unit to be bijective and thus an isomorphism of groups.

.

There is an obvious forgetful functor just given by forgetting the action, and the “free

-algebra” left adjoint is also not hard to construct. In fact, it is given by

: for any object

, the object

has a tautological action given by the monad multiplication

.

Monadicity

Although all monads come from adjunctions, the converse is false. Given an arbitrary adjunction , we certainly get a canonical functor

(induced by

, and this is in fact the unique such functor that respects the “forgetful” functors to

), but it need not be an equivalence. In the case that it is an equivalence, the adjunction (or, for brevity and convenience, just the right adjoint) is said to be monadic. For example, we have shown that: monoids are monadic over sets, ring modules are monadic over sets, group algebras are monadic over groups, pointed sets are monadic over sets, and abelian groups are monadic over groups.

For a simple non-example, just take the category of topological spaces and its forgetful functor . The left adjoint is the “discrete space” functor, but the induced monad is then just the identity monad on

. Therefore, topological spaces are not monadic over sets. Comparing this adjunction to all of the above (monadic) adjunctions, you might infer an intuition about monadicity: adjunctions tend to be monadic if they come from forgetting algebraic structure.

Note. Magically, if you restrict topological spaces to compact Hausdorff spaces, then you recover monadicity! More precisely, the forgetful functor admits a left adjoint (given by the Stone-Čech compactification on the set viewed as a discrete space). For a discrete space

, the compactification is given by taking the set of ultrafilters on

(the topology on

is generated by sets of the form

for

). The action of a

-algebra assigns to an ultrafilter (think: Cauchy “sequence”) a point (think: limit point) of

, so ultimately induces a compact Hausdorff topology on

. This ultimately shows that

.

So how do we know if a functor is monadic? I mean, besides constructing the Eilenberg-Moore category for the induced monad and hoping that the forgetful functor factors via equivalence through it.

Luckily, there is a more concrete way of verifying this.

Theorem 4 (Beck’s monadicity theorem). [Bor94(II), Thm 4.4.4] A functor is monadic if and only if

admits a left adjoint

creates coequalisers of

-split pairs (these are pairs of morphisms whose images under

admits a split coequaliser)

Proof. One direction is easier than the other. If is monadic, then without loss of generality, write

with left adjoint

, and write

. Suppose

make a

-split pair. This means that we have morphisms as in the diagram

such that and

are sections, and

is idempotent. This gives

canonical

-algebra structure: the action is given by

. This works because split equalisers are universal (i.e., preserved by all functors) and are in particular preserved by

and

, which ensures that this action respects the unit and multiplication of

(see [Bor94(II), Prop 4.3.2] for a detailed proof).

More interesting is the converse. We aim to prove that the induced functor (for

) is an equivalence. If you let

denote the full subcategory of free

-algebras (i.e., the Kleisli category), then we have a canonical map

given by sending

(which is well-defined by the zigzag identities), and the composite

is precisely the full inclusion. Therefore,

is full.

Let be a

-algebra, then the pair

is

-split (under

, this pair becomes

, which has coequaliser

, and the adjunction unit as both sections). Thus, by assumption, we can construct a coequaliser in

, and preservation ensures that the image of this colimit under

is precisely

in

.

To see that is faithful (completing the proof), it suffices to prove that the adjunction counit is an epimorphism. This is because the zigzag identities make the pair

out to be

-split, and the image under

has coequaliser

, therefore lifting to a coequaliser

, as desired.

Why monads?

Rather than “why monads,” the better question is probably “why monadicity?” I’m sure monads themselves have many useful properties (that I have not yet seen), but monadicity can be convenient. The most evident advantage is that monadicity of over a category

allows you to reason with the objects of

as structured objects of

in a very precise sense (in fact, the structure even algebraic in a way). This is particularly useful when working with categories that are monadic over sets.

Through this, one can hope to lift many properties of to the monadic category. For example, general forgetful functors (i.e., right adjoints) preserve limits, but monadic functors create limits. On the other hand, the forgetful functor reflects any colimit that is preserved by the monad. If

is cocomplete, then so is

once it has reflexive coequalisers (in particular, every monadic category over sets is complete and cocomplete).

Related to this, we can also hope to lift adjunctions through monadic functors.

Theorem 4 (Adjoint triangle theorem). [SV10, Lemma 2.1] Suppose is an adjoint pair such that the adjunction counits are all regular epimorphisms (i.e., coequaliser maps; in fact, it is equivalent to asserting that the diagrams

are coequaliser diagrams. Since the parallel arrows have a common section given by the unit

, these are reflexive coequalisers). Note that this condition is met by any monadic functor

.

If is a category with all reflexive coequalisers, then a functor

has a left adjoint if and only if

does, as in the picture

Proof. Adjunctions are closed under composition, so one direction is clear. Suppose we have a left adjoint , then we wish to construct a left adjoint of

. By assumption, every counit map arises from a relfexive coequaliser

. Therefore, the left adjoint is defined on any

as the (reflexive) coequaliser

. To see why this works, apply several adjunctions:

where every row is a (reflexive) equaliser, applying the Yoneda lemma.

As a corollary, we get the more convenient result:

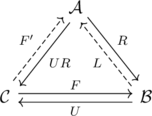

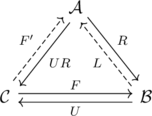

Theorem 5 (Adjoint lifting theorem). Suppose is monadic over

, and

is monadic over

. Let

and

fit in the commutative square

If has reflexive coequalisers and

has a left adjoint, then

admits a left adjoint as well.

Proof. Draw the diagonal functor . Since

and the latter has left adjoints, we easily get a left adjoint to

. Therefore, by the adjoint triangle theorem applied to the upper-right triangle,

has a left adjoint as well.

Monadicity in action

The application which led me to want to spell out all of this stuff is the following result (which surprised me at first, but perhaps that just exposes my naïvité). Suppose is a monoidal category, and we have another (small, I guess) category

. Then, we can endow the functor category

with monoidal structure just by having the tensor product act pointwise. This is unsurprising, and what else is unsurprising is that the pointwise tensor product inherits many basic properties of the original one: being braided, symmetric, cartesian (thanks to limits being computed pointwise), etc.

What came as a surprise to me is that the pointwise tensor product inherits (right) closedness as well (under suitable conditions on )! Recall that a monoidal category is (right) closed if tensoring from the right admits a right adjoint:

for every

, which is called the internal hom. Naïvely, to endow the functor category with an internal hom, one might try to perform it pointwise: set

for

and

. However, this construction is not functorial: internal homs, like ordinary homs, are contravariant in the first variable. This is only successful if the domain category

were discrete, so that there is no action of morphisms to consider.

Nonetheless, we have the following result:

Proposition 6. If is a complete (right) closed monoidal category, and

is small, then

is closed monoidal under the pointwise tensor product.

Proof. Let be the canonical inclusion of the discrete subcategory spanned by the objects of

. Since

is complete, the induced map

admits a right adjoint

given by the (discrete) right Kan extension

Note that since both functor categories are complete, with limits computed pointwise, it follows that creates all limits, and so we may apply (the dual of) Beck’s monadicity theorem to conclude that

is comonadic. Therefore, (the dual of) the adjoint lifting theorem ensures that the right adjoint of every functor

(which we already know exists, given by pointwise internal hom) lifts to a right adjoint to the functor

for every

.

Remark. is also monadic over

via the (discrete) left Kan extension. The proof is the same: both categories are cocomplete with pointwise colimits, so Beck’s monadicity theorem applies.

But what does the internal hom look like? This is what motivated the post.

The most systematic way of working it out is to just retrace the relevant proofs and hope it gives a legible formula. First, the main ingredient: the adjoint triangle theorem. Using the notation as in the theorem:

Assuming that the left adjoint exists, then the functor

was defined pointwise on

as the coequaliser of the maps

and

where .

In our case, we need to apply this dually (though hopefully once is sufficient, since the forgetful functor just forgets the actions of morphisms and leaves the objects unchanged). Fix a functor , so the goal is to construct the right adjoint to

. Just as in the proof of the adjoint functor theorem, a right adjoint to the composite functor

is right at hand since

, where

and

is the pointwise internal hom in

.

Therefore, the right adjoint to is given by a (pointwise) equaliser

The first arrow comes from the adjunction unit given by the evident map

. The other map comes from the mate

given by the evident map

. In summary, for every

, the internal hom is the equaliser

Intuitively, the middle object collects morphisms for every

, and the upper arrow maps this family to the family of

for every

, while the lower arrow maps this family to the family of

for every

.

In particular, if you let , then diagrammatically, the elements of

are given by a choice of

along with a chosen extension

for every

such that for any

, we have commutativity of the diagram

In other words, the internal hom evaluated at gives partial information about natural transformations

that are possible, as can be “seen” from mapping out of

. Moreover, we can see how this construction is functorial: if

, then the map

just restricts families via the intuitive formula

. In particular, a true natural transformation

would be built up from the morphisms

as in the above diagram (naturality of this choice then following from the functoriality of

).

I can see why I couldn’t find an explicit formulation of the internal hom for the pointwise tensor product, but this formula is actually surprisingly satisfactory and concrete (literally, the intuition can be made precise if is a concrete category).